QUOTE(skylinelover @ Jan 7 2015, 06:36 AM)

Nvidia (NASDAQ: NVDA; /ɪnˈvɪdiə/ in-VID-ee-ə) is an American global technology company based in Santa Clara, California. The company invented the graphics processing unit (GPU) in 1999. GPUs drive the computer graphics in games and in applications used by professional designers. Their parallel processing capabilities provide researchers and scientists with the ability to efficiently run high-performance applications, and they are deployed in supercomputing sites around the world. More recently, Nvidia has moved into the mobile computing market, where its processors power phones and tablets, as well as auto infotainment systems. Its competitors include Intel, AMD and Qualcomm.

The following are the most notable product families produced by Nvidia:

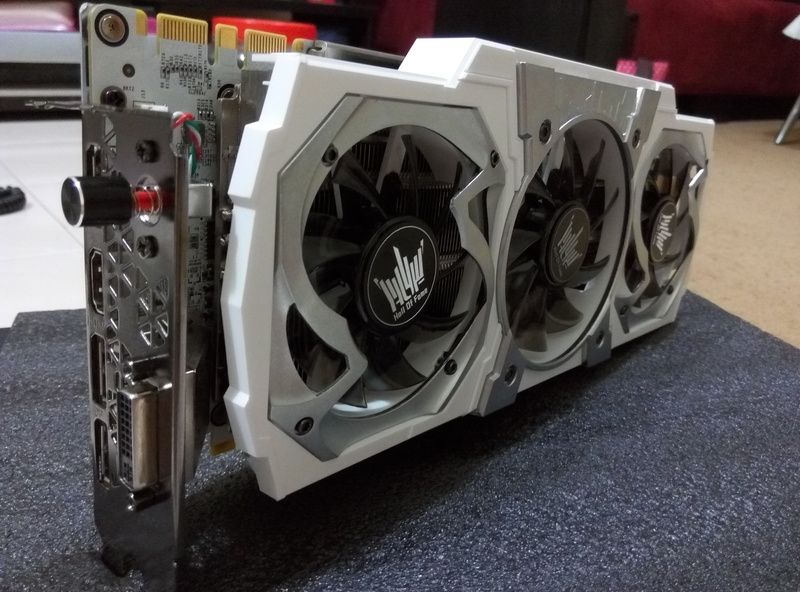

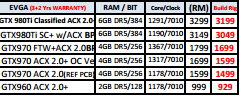

[.] GeForce - the gaming graphics processing products for which Nvidia is best known.

[.] Quadro - computer-aided design and digital content creation workstation graphics processing products.

[.] Tegra - a system on a chip series for mobile devices.

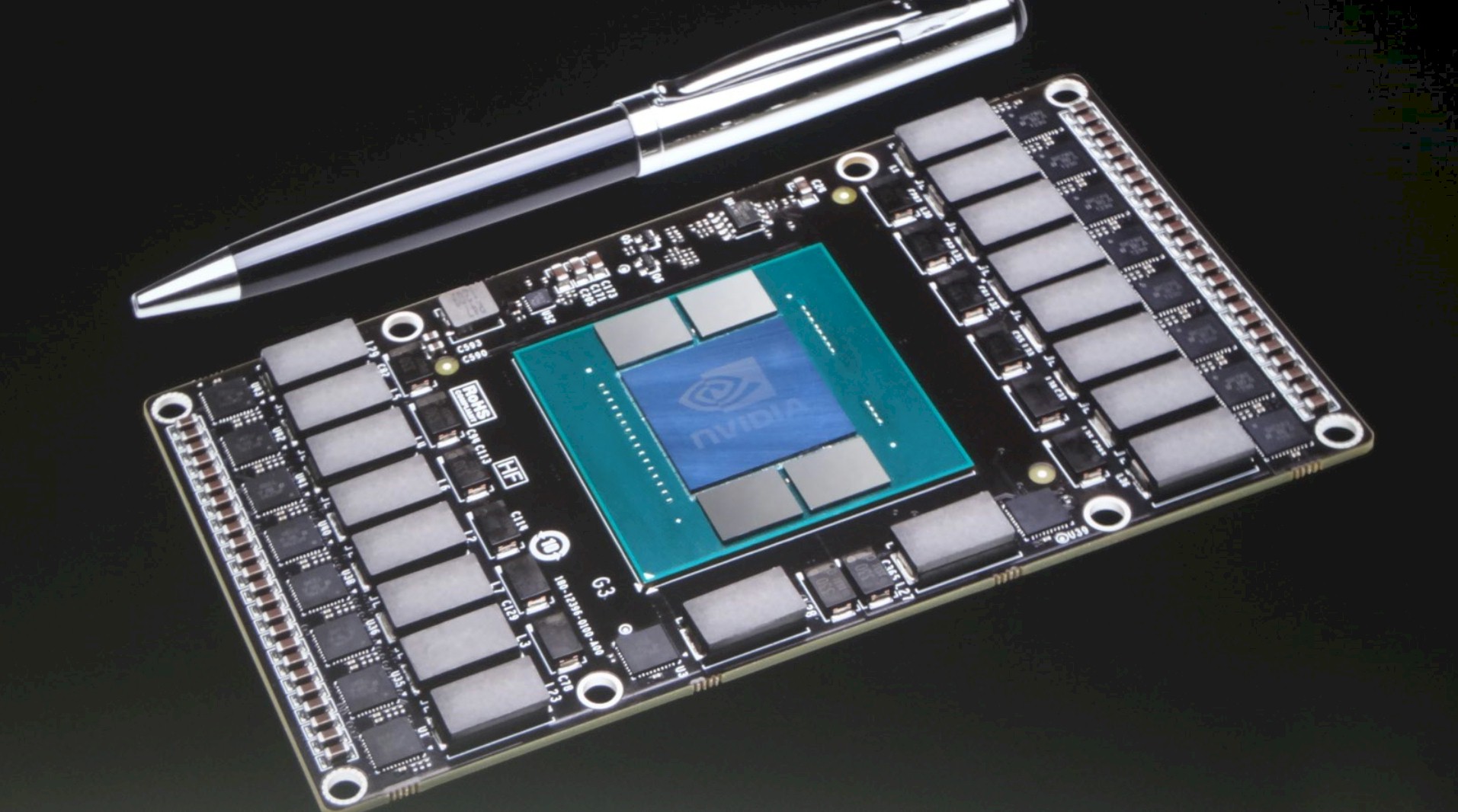

[.] Tesla - dedicated general purpose GPU for high-end image generation applications in professional and scientific fields.

[.] nForce - a motherboard chipset created by Nvidia for AMD Athlon and Duron microprocessors.

FAST FACTS

> Founded in 1993

> Jen-Hsun Huang is co-founder, president and CEO

> Headquartered in Santa Clara, Calif.

> Listed on NASDAQ under the symbol NVDA in 1999

> Invented the GPU in 1999 and has shipped more than 1 billion to date

> 7,000 employees worldwide

> $4 billion in revenue in FY12

> 2,300+ patents worldwide

NVIDIA - http://www.nvidia.com/page/home.html

GeForce Drivers - http://www.geforce.com/drivers

Blog - http://blogs.nvidia.com

Jul 8 2015, 08:50 PM, updated 10y ago


the box is kinda dumb as well for missing the Q, but it's the same model
