Powerful and Efficient for Professionals: The Ultra Slim 17.3" Mobile Workstation WS72

MSI introduces its new ultra-thin workstation laptop, the WS72 6Q series.

With an ultra-thin, lightweight aluminum chassis, the WS72 measures less than 19.9mm thick and weighs only 2.55kg. MSI bills it as the world's thinnest and lightest 17-inch ultra mobile workstation. It is designed for M&E (Multimedia & Entertainment) designers and CAD/CAM engineers who need serious processing power on the go.

The new WS72 mobile workstation series ships with Microsoft Windows 10 Pro preinstalled, and each model comes with one of NVIDIA's new mobile Quadro GPUs: the WS72-6QJ with the M2000M, the WS72-6QI with the M1000M, and the WS72-6QH with the M600M.

The Quadro M2000M, M1000M, and M600M are built on NVIDIA's Maxwell chip architecture. Maxwell is NVIDIA's 10th-generation GPU architecture, following Kepler. This new generation of Quadro processors delivers incredible performance and power efficiency: it is up to 2X faster than its predecessor, with up to 4GB of ultra-fast GDDR5 memory. These new Quadro mobile GPUs span the entry-level to mid-range segments of the professional graphics card market.

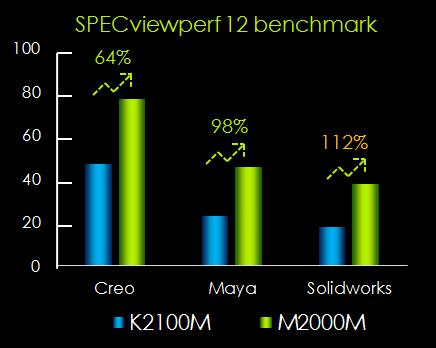

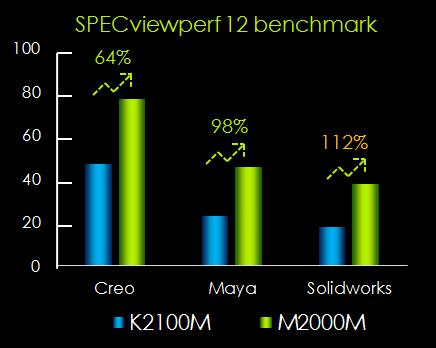

Let's compare each of these three GPUs with its previous-generation counterpart at the same product tier: the Quadro M2000M with the Quadro K2100M, the Quadro M1000M with the Quadro K1100M, and the Quadro M600M with the Quadro K620M.

The number of CUDA cores and the memory interface largely determine a graphics card's final performance. Looking at these two specifications, the Quadro M2000M has 640 CUDA cores with a 128-bit memory interface and 5000MHz GDDR5, while the Quadro K2100M has 576 CUDA cores and lower memory bandwidth than the M2000M.

The Quadro M1000M comes with 512 CUDA cores and a 128-bit GDDR5 memory interface as well. The Quadro K1100M, however, has only 384 CUDA cores, with a 128-bit interface to 2800MHz GDDR5.
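The memory figures above can be turned into theoretical peak bandwidth with the usual rule: bus width in bytes multiplied by the effective data rate. The sketch below assumes the quoted "5000MHz" and "2800MHz" figures are effective GDDR5 data rates in MT/s, as memory clocks are commonly listed; the function name `peak_bandwidth_gb_s` is purely illustrative. The K2100M and M1000M are left out because their data rates are not given here.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, effective_rate_mt_s: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    Assumes effective_rate_mt_s is the effective data rate in
    mega-transfers per second (the "MHz" figure quoted for GDDR5).
    """
    bytes_per_transfer = bus_width_bits / 8  # e.g. 128-bit bus -> 16 bytes per transfer
    # bytes/transfer * 10**6 transfers/s / 10**9 bytes/GB -> divide by 1000
    return bytes_per_transfer * effective_rate_mt_s / 1000


# Bus widths (bits) and data rates (MT/s) as quoted in the text above.
gpus = {
    "Quadro M2000M": (128, 5000),
    "Quadro K1100M": (128, 2800),
}

for name, (bus_bits, rate) in gpus.items():
    print(f"{name}: {peak_bandwidth_gb_s(bus_bits, rate):.1f} GB/s")
```

Under these assumptions the M2000M works out to 80.0 GB/s and the K1100M to 44.8 GB/s. With the same bus width, the difference comes entirely from the faster memory.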

Next, let's look at the Quadro M600M. It has 384 CUDA cores and a 128-bit GDDR5 memory interface; for comparison, the GeForce GTX 960M uses the same 128-bit GDDR5 interface but has 640 CUDA cores. The Quadro K620M also has 384 CUDA cores, but uses a narrower 64-bit DDR3 memory interface.
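As a rough, illustrative comparison, the sketch below tabulates the CUDA core counts stated above for each new GPU and its predecessor and computes the percentage change. Core count alone ignores per-core differences between GPU architectures, so treat the result only as a first indicator; the helper `pct_increase` is a hypothetical name.

```python
# CUDA core counts as stated in the article: (new GPU, cores, predecessor, cores).
pairs = [
    ("Quadro M2000M", 640, "Quadro K2100M", 576),
    ("Quadro M1000M", 512, "Quadro K1100M", 384),
    ("Quadro M600M", 384, "Quadro K620M", 384),
]


def pct_increase(new: int, old: int) -> float:
    """Percentage change from old to new (positive means more cores)."""
    return (new - old) / old * 100


for new_name, new_cores, old_name, old_cores in pairs:
    change = pct_increase(new_cores, old_cores)
    print(f"{new_name} vs {old_name}: {new_cores} vs {old_cores} cores ({change:+.1f}%)")
```

This prints +11.1% for the M2000M, +33.3% for the M1000M, and +0.0% for the M600M, which is why the M600M's gains over the K620M rest on its wider 128-bit GDDR5 interface rather than on extra cores.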

The following image from NVIDIA's Quadro webpage shows the Maxwell and Kepler chip architectures of these Quadro GPUs:

http://www.nvidia.com/object/quadro-for-mo...rkstations.html

SPECviewperf 12 is the latest version of the SPECviewperf benchmark, released by the Standard Performance Evaluation Corporation's (SPEC) Graphics Performance Characterization (SPECgpc) working group. It replaces SPECviewperf 11, which was released in June 2010. SPECviewperf 12 includes updated versions of the SPECviewperf 11 tests as well as new tests that simulate energy and medical applications. It also includes the first DirectX test from the SPECgpc group.

In SPECviewperf 12, the Quadro M2000M, M1000M, and M600M outperform the Quadro K2100M, K1100M, and K620M in the PTC Creo 2.0, Autodesk Maya 2012, and SolidWorks 2013 tests. The composite scores for these benchmarks are listed in the chart below:

We present SPECviewperf 12 benchmark data below for your reference.

Powered by NVIDIA Quadro M-series GPUs up to the M2000M, the MSI WS72 mobile workstations take full advantage of NVIDIA's state-of-the-art Maxwell architecture. Besides being more energy efficient, they handle challenging visualization workloads such as 3D modeling and rendering with ease, thanks to the 50%-plus performance gain delivered by the more advanced architecture.

Jan 6 2016, 04:34 PM