More nonsense that I like to read from The Inquirer:


Five models at launch, HD Audio and HDMI supported all the way

http://www.theinquirer.net/default.aspx?article=38923

By Theo Valich: Saturday 14 April 2007, 08:32

AMD MADE a mess of its own naming conventions, because it had already launched rebranded RV510 and RV530/560 products as the Radeon X2300. So it decided to ditch the "X" prefix, which was introduced to mark the arrival of the PCI Express standard, even though many cards shipped with an AGP interface.

The HD models really do have a reason to be called that, since from the RV610LE chip up to the R600 boards, the HD Audio codec is present and HDMI support is native. However, there are some video processing differences, a repeat of the RV510/530 vs. R580 scenario, albeit with a different GPU mix.

First of all, we have received a lot of e-mails from readers asking about HDMI support and how it is done, since leaked pictures of OEM designs show only dual-link DVI connectors and no HDMI. Again, we need to remind you of our R600 HDMI story, and that story is about elementary maths.

A single dual-link DVI connector has enough bandwidth to stream both video and audio at 1280x720 and 1920x1080 to an HDMI interface by way of a dongle. On lower-end models, the dongle may be skipped and a native HDMI connector placed on the bracket.
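The "elementary maths" can be sketched by comparing each format's pixel clock with the TMDS pixel-clock ceiling of a DVI link. This is a minimal sketch under stated assumptions: the standard CEA-861 60 Hz timings (total frame sizes including blanking, which the article does not quote) and the DVI 1.0 single-link limit of 165 MHz. The constant and function names are hypothetical. As in HDMI, the audio is assumed to travel inside the blanking intervals, so it needs no extra pixel clock.

```python
# Hypothetical names; timings and limits are assumptions, not from the article.
SINGLE_LINK_MAX_HZ = 165_000_000  # DVI 1.0 single-link TMDS pixel clock ceiling
DUAL_LINK_MAX_HZ = 2 * SINGLE_LINK_MAX_HZ  # two links, each carrying half the pixels

# CEA-861 60 Hz modes: (total width, total height, refresh), blanking included.
TIMINGS = {
    "720p60": (1650, 750, 60),
    "1080p60": (2200, 1125, 60),
}


def pixel_clock_hz(mode: str) -> int:
    """Pixel clock = total pixels per frame * frames per second."""
    h_total, v_total, refresh = TIMINGS[mode]
    return h_total * v_total * refresh


for mode in TIMINGS:
    clk = pixel_clock_hz(mode)
    print(f"{mode}: {clk / 1e6:.2f} MHz pixel clock, "
          f"{clk / SINGLE_LINK_MAX_HZ:.0%} of one link, "
          f"{clk / DUAL_LINK_MAX_HZ:.0%} of a dual link")
```

Under these assumptions, both modes (74.25 MHz and 148.5 MHz) fit under even a single link's ceiling, which is consistent with the claim that a dongle on a dual-link DVI port can carry them.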

The RV610LE will be known to the world as the Radeon HD 2400 Pro and will support 720p HD playback. If you want 1080p HD playback, you have to get a faster part. In addition, the HD 2400 Pro will be paired with DDR2 memory only, the very same chips many enthusiasts use as their system memory: DDR2-800 or PC2-6400, running at 800 MHz effective. The reason for the 720p limitation is very simple: this chip goes up against the G86-303 chip with a 64-bit memory interface, the 8300 series. It goes without saying that the RV610LE is a 64-bit chip as well.
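The memory numbers above translate into a theoretical peak bandwidth with one multiplication. A minimal sketch, assuming DDR2-800 means 800 million transfers per second (a 400 MHz clock, double data rate) and taking the 64-bit bus from the text; the function name is hypothetical, and real-world throughput is lower than this peak.

```python
def peak_bandwidth_bytes_per_s(bus_width_bits: int, mega_transfers_per_s: int) -> int:
    """Theoretical peak: bytes moved per transfer * transfers per second."""
    return (bus_width_bits // 8) * mega_transfers_per_s * 1_000_000


# DDR2-800 (assumed 800 MT/s) on the 64-bit bus quoted for the RV610LE.
ddr2_800_64bit = peak_bandwidth_bytes_per_s(64, 800)
print(f"DDR2-800 on a 64-bit bus: {ddr2_800_64bit / 1e9:.1f} GB/s")
# prints "DDR2-800 on a 64-bit bus: 6.4 GB/s"
```

The 6,400 MB/s result is where the PC2-6400 module name comes from, since a DDR2 DIMM is also 64 bits wide.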

The second in line is the RV610Pro chip, branded Radeon HD 2400 XT. This pup is paired with GDDR3 memory and is the first chip able to play back Full HD video (1920x1080), thanks to the fact that it is a fully-fledged RV610 GPU rather than a part on an ultra-cheap, 64-bit-only PCB. The RV630 Pro is an interesting one. Officially named Radeon HD 2600 Pro, it sports the very same DDR2 memory used on the HD 2400 Pro and on the GeForce 8500 GT boards we have, but there are some memory controller differences that will be revealed as soon as we get permission to put the pictures online.

The RV630XT, the Radeon HD 2600 XT, is nearly identical to the Pro version when it comes to the GPU, but this board is a monster when it comes to memory support, just like its predecessor, the X1600XT. Indeed, this is the only product in the whole launch-day line-up that supports both GDDR-3 and GDDR-4 memory. Both memory types will end up clocked sky-high, meaning the excellent 8600GTS will have a fearsome competitor.

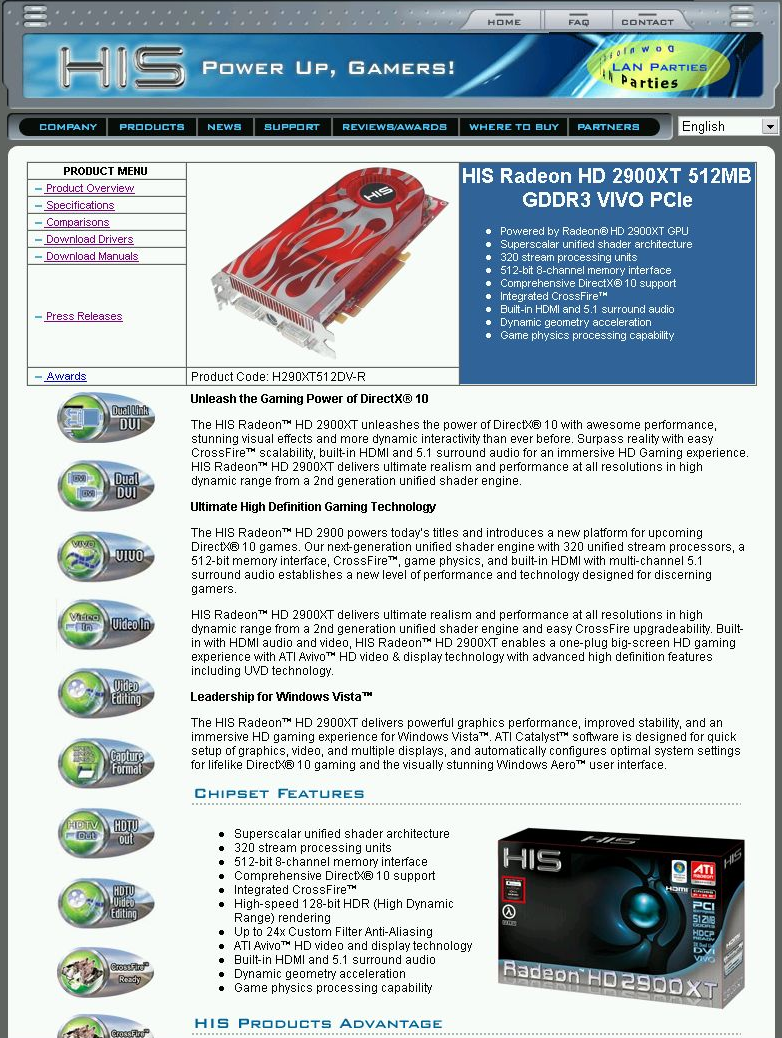

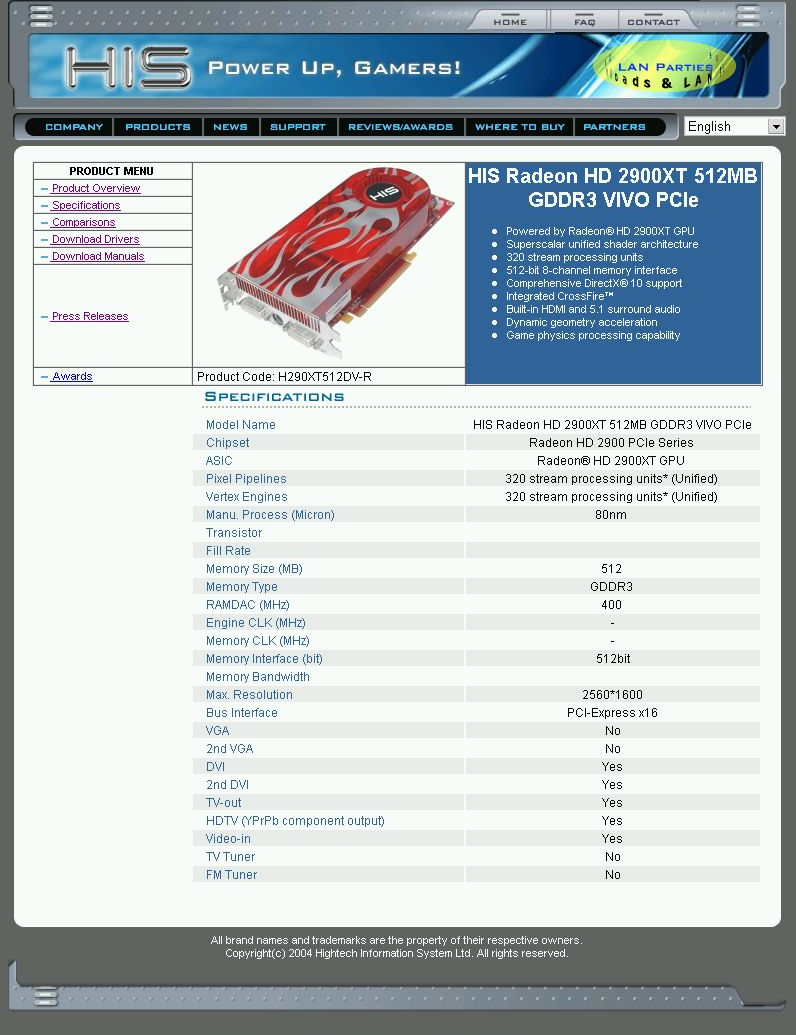

The R600 512MB is the grand finale. R600 is the HD 2900 XT, as our Wily already disclosed. This board packs 512MB of GDDR-3 memory from Samsung and offers the same or slightly better performance than the 8800GTX at the 8800GTS price point. The R600 GPU supports two independent video streams, so even a dual-link DVI can be done, even though we doubt this was high on AMD's priority list. This product is nine months late, and a refresh is around the corner, unless AMD continues to execute as ATi did.

The R600 1GB is very interesting. Originally, we heard about this product as a GDDR-4-only part that was supposed to launch at Computex. We have since heard more details: it will be available in limited quantities only, and you will need to order at least 100 cards to get it. We expect that ATi will refrain from introducing it until a dual-die product from nV shows up, so that AMD can offer a CrossFire version with 2GB of video memory in total for the same price as Nvidia's 1.5GB. Then again, in the war of video memory numbers, AMD is now losing to Nvidia flat-out.

AMD compromised its own product line-up by making this 512MB card the launch one, and no amount of marketing papers and powerpointery can negate the fact that AMD is nine months late and has 256MB less memory than a six-month-old flagship product from the competitor. µ

http://www.dailytech.com/article.aspx?newsid=6903

320-stream processors, named ATI Radeon HD 2900

AMD has named the rest of its upcoming ATI Radeon DirectX 10 product lineup. The new DirectX 10 product family received the ATI Radeon HD 2000-series moniker. For the new product generation, AMD has added HD to the product name to designate the entire lineup's Avivo HD technology. AMD has also removed the X prefix from its product models.

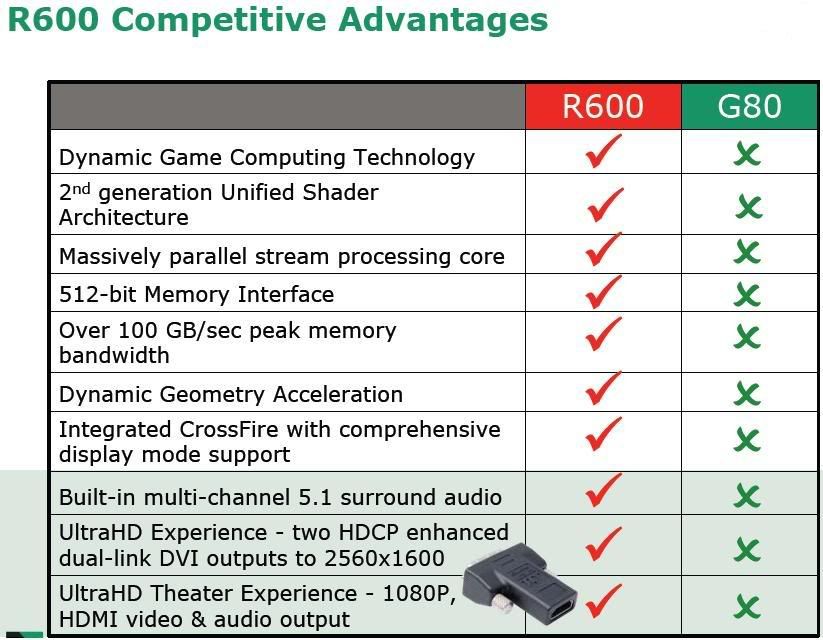

At the top of the DirectX 10 chain is the ATI Radeon HD 2900 XT. The AMD ATI Radeon HD 2900-series features 320 stream processors, over twice as many as NVIDIA's GeForce 8800 GTX. AMD couples the 320 stream processors with a 512-bit memory interface split into eight channels. CrossFire is now natively supported by the AMD ATI Radeon HD 2900-series; the external CrossFire dongle is a thing of the past.
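The bus and shader figures above reduce to simple arithmetic. A minimal sketch, assuming the 512-bit interface is split evenly across its eight channels and taking 128 stream processors for the GeForce 8800 GTX (a figure not stated in the article). These are raw counts only; the two architectures count stream processors differently, so the ratio is not a performance comparison.

```python
# Figures from the article.
BUS_WIDTH_BITS = 512
CHANNELS = 8
HD2900_STREAM_PROCESSORS = 320

# Assumption: not stated in the article.
GTX8800_STREAM_PROCESSORS = 128

bits_per_channel = BUS_WIDTH_BITS // CHANNELS  # assumes an even split
bytes_per_transfer = BUS_WIDTH_BITS // 8       # across the whole bus
sp_ratio = HD2900_STREAM_PROCESSORS / GTX8800_STREAM_PROCESSORS

print(f"{CHANNELS} channels x {bits_per_channel} bits")
print(f"{bytes_per_transfer} bytes per memory transfer")
print(f"{sp_ratio}x the stream processor count")
```

The 2.5x ratio is what "over twice as many" refers to.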

The R600-based ATI Radeon HD 2900-series products also support 128-bit HDR rendering. AMD has also upped the ante on anti-aliasing support. The ATI Radeon HD 2900-series supports up to 24x anti-aliasing, while NVIDIA's GeForce 8800-series supports only up to 16x. AMD's ATI Radeon HD 2900-series also supports physics processing.
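"128-bit HDR" describes how much precision each pixel gets. A minimal sketch, assuming the common reading of four 32-bit floating-point channels (RGBA) per pixel; the pixel values and the 1920x1080 frame size are illustrative choices, not figures from the article.

```python
import struct

# Assumption: "128-bit HDR" = four IEEE 754 single-precision channels (RGBA).
PIXEL_FORMAT = "<4f"  # little-endian, four 32-bit floats

bytes_per_pixel = struct.calcsize(PIXEL_FORMAT)  # 16 bytes = 128 bits

# An HDR pixel brighter than 1.0, which normalized 8-bit channels cannot store.
pixel = struct.pack(PIXEL_FORMAT, 4.0, 2.5, 0.25, 1.0)
r, g, b, a = struct.unpack(PIXEL_FORMAT, pixel)

# Size of one 1920x1080 colour buffer at this precision.
frame_bytes = 1920 * 1080 * bytes_per_pixel
print(bytes_per_pixel * 8, "bits per pixel")
print(f"{frame_bytes / 2**20:.1f} MiB per 1920x1080 frame")
```

At 16 bytes per pixel, a single 1080p buffer takes about 31.6 MiB. This is one reason HDR rendering leans heavily on memory capacity and bandwidth.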

New to the ATI Radeon HD 2900-series is integrated HDMI output with 5.1 surround sound. However, early images of AMD's OEM R600 reveal two dual-link DVI outputs, rendering the audio functions useless.

AMD's RV630-based products will carry the ATI Radeon HD 2600 moniker with Pro and XT models. The value-targeted RV610-based products will carry the ATI Radeon HD 2400 name with Pro and XT models as well.

The entire AMD ATI Radeon HD 2000-family features the latest Avivo HD technology. AMD has upgraded Avivo with a new Universal Video Decoder (UVD) and a new Advanced Video Processor (AVP). UVD made its debut in the OEM-exclusive RV550 GPU core. UVD provides hardware acceleration of the H.264 and VC-1 high-definition video formats used by Blu-ray and HD DVD. The AVP allows the GPU to apply hardware acceleration and video processing functions while keeping power consumption low.

Expect AMD to launch the ATI Radeon HD 2000-family in the upcoming weeks, if AMD doesn't push back the launch dates further.

http://hardware.gotfrag.com/portal/story/37293/ (via DailyTech)

Mar 1 2007, 11:34 AM, updated 19y ago
