QUOTE(Jazted @ Feb 12 2025, 07:31 PM)

Can someone copy the full article? This one is behind a paywall.

QUOTE

Innovative parallel computing design using domestic hardware underscores Beijing's broader strategy to blunt 'chokepoint' risks in critical tech

Computer researchers in China using domestically made graphics processors have achieved a near-tenfold boost in performance over powerful US supercomputers that rely on Nvidia's cutting-edge hardware, according to a peer-reviewed study.

The accomplishment points to possible unintended consequences of Washington's escalating tech sanctions while challenging the dominance of American-made chips, long considered vital for advanced scientific research.

The researchers said that innovative software optimisation techniques improved the efficiency of computers powered by Chinese-designed graphics processing units (GPUs) enough for them to outperform US supercomputers in certain scientific computations.

While sceptics caution that software tweaks alone cannot bridge hardware gaps indefinitely, the development underscores Beijing's broader strategy to mitigate "chokepoint" risks in critical technologies.

Scientists often rely on simulations to model real-world circumstances, such as designs to defend against flooding or urban waterlogging. But such simulations, especially large-scale, high-resolution ones, demand substantial time and computational resources, which limits how widely the approach can be applied.

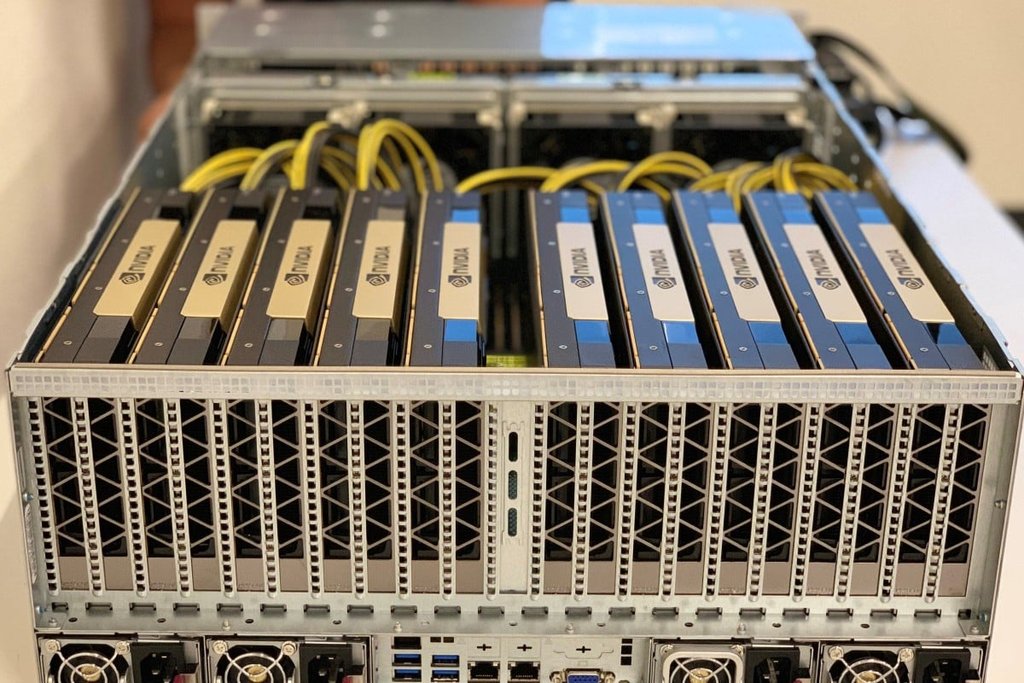

The challenge for Chinese scientists is even more daunting. On the hardware side, production of advanced GPUs like the A100 and H100 is dominated by foreign manufacturers. On the software side, US-based Nvidia has restricted its CUDA software ecosystem from running on third-party hardware, hindering the development of independent algorithms.

In search of a breakthrough, Professor Nan Tongchao of the State Key Laboratory of Hydrology-Water Resources and Hydraulic Engineering at Hohai University in Nanjing began exploring a "multi-node, multi-GPU" parallel computing approach based on domestic CPUs and GPUs. The results of his team's research were published in the Chinese Journal of Hydraulic Engineering on January 3.

The key to successful parallel computing is efficient data transfer and task coordination between nodes, which minimises performance loss.
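
To make the idea of inter-node data transfer concrete, here is a minimal Python sketch of a halo (ghost-cell) exchange, a common way grid-based solvers share boundary data between nodes. It is a toy illustration under stated assumptions, not the code from Nan's paper: the function names are hypothetical, the "nodes" are simulated as list chunks inside one process, and a simple three-point averaging stencil stands in for real flood equations. The point it shows is that each chunk needs only its neighbours' edge cells, so only those values would have to cross the network.

```python
# Toy 1D halo (ghost-cell) exchange, simulated in a single process.
# Hypothetical illustration only; NOT the code from Nan's paper.


def serial_step(u):
    """One three-point averaging update on the whole domain; end cells stay fixed."""
    out = u[:]
    for i in range(1, len(u) - 1):
        out[i] = (u[i - 1] + u[i] + u[i + 1]) / 3.0
    return out


def split(u, parts):
    """Partition the global array into contiguous, non-empty chunks (parts <= len(u))."""
    n = len(u)
    bounds = [n * k // parts for k in range(parts + 1)]
    return [u[bounds[k]:bounds[k + 1]] for k in range(parts)]


def halo_exchange(chunks):
    """Pad each chunk with copies of its neighbours' edge cells.

    Returns [left_ghost] + chunk + [right_ghost]; a ghost is None at a
    physical boundary. In a real multi-node run, only these edge values
    would be sent over the network.
    """
    padded = []
    for k, c in enumerate(chunks):
        left = chunks[k - 1][-1] if k > 0 else None
        right = chunks[k + 1][0] if k < len(chunks) - 1 else None
        padded.append([left] + c + [right])
    return padded


def local_step(padded, is_first, is_last):
    """Apply the same stencil to one padded chunk, using its ghost cells."""
    core = padded[1:-1]
    out = core[:]
    for i in range(len(core)):
        # Physical boundary cells keep their value, matching serial_step.
        if (is_first and i == 0) or (is_last and i == len(core) - 1):
            continue
        g = i + 1  # position of this cell inside the padded list
        out[i] = (padded[g - 1] + padded[g] + padded[g + 1]) / 3.0
    return out


def parallel_step(u, parts):
    """Split, exchange halos, update each chunk locally, then reassemble."""
    padded = halo_exchange(split(u, parts))
    result = []
    for k, p in enumerate(padded):
        result += local_step(p, k == 0, k == parts - 1)
    return result


if __name__ == "__main__":
    u = [float((i * i) % 7) for i in range(20)]
    # Four simulated nodes exchange 2 * (4 - 1) = 6 edge values per step.
    print(parallel_step(u, 4) == serial_step(u))  # True
```

Because the decomposed update reproduces the serial one exactly, the only extra cost of splitting the domain is the edge-cell traffic, which is what software-level communication optimisations try to shrink.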

In 2021, Mario Morales-Hernandez and his research team at Oak Ridge National Laboratory in the US introduced a "multi-node, multi-GPU" flood forecasting model, known as TRITON, using the Summit supercomputer. Even with 64 nodes, however, its "speedup", or increase in processing speed, was only around six times.
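
Speedup and parallel efficiency are the usual way to read figures like TRITON's. The sketch below uses the textbook definitions (speedup S = baseline time / parallel time, efficiency E = S / p for p nodes); it assumes, since the article does not say, that the "around six times" speedup is measured against a single-node run.

```python
def speedup(t_baseline: float, t_parallel: float) -> float:
    """Speedup S = T_baseline / T_parallel (how many times faster the run is)."""
    return t_baseline / t_parallel


def parallel_efficiency(s: float, units: int) -> float:
    """Efficiency E = S / p; 1.0 would mean perfect linear scaling on p units."""
    return s / units


if __name__ == "__main__":
    # TRITON on Summit, per the article: roughly 6x speedup on 64 nodes.
    # Assumed baseline: a single node (not stated in the article).
    e = parallel_efficiency(6.0, 64)
    print(f"TRITON efficiency: {e:.3f}")  # TRITON efficiency: 0.094
```

Under that assumption, each of the 64 nodes contributed on average less than a tenth of what perfect scaling would give, the kind of loss that communication overhead between nodes typically causes.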

Nan proposed a new architecture that combined multiple GPUs into a single node, to compensate for the performance limitations of domestic CPUs and GPUs. At the same time, he improved data exchanges between nodes at the software level to reduce the communication overhead between them.
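
Why packing several GPUs into one node can help: in a hypothetical strip decomposition where each GPU owns one slice of the grid, neighbouring slices on the same node can exchange data over fast local links, and only slices that straddle two nodes need the network. The toy model below counts both kinds of boundary; the function name and the strip layout are assumptions for illustration, not the partitioning used in the paper.

```python
def boundary_counts(total_gpus: int, gpus_per_node: int) -> tuple[int, int]:
    """Count subdomain boundaries in a hypothetical 1D strip decomposition.

    The grid is cut into `total_gpus` strips in a row, one per GPU, and
    consecutive strips are placed on the same node. Returns the number of
    (intra_node, inter_node) boundaries between neighbouring strips.
    """
    if total_gpus % gpus_per_node:
        raise ValueError("total_gpus must be a multiple of gpus_per_node")
    nodes = total_gpus // gpus_per_node
    total = total_gpus - 1  # every neighbouring pair of strips shares a boundary
    inter = nodes - 1       # pairs split across two nodes need the network
    return total - inter, inter


if __name__ == "__main__":
    for per_node in (1, 4):
        intra, inter = boundary_counts(800, per_node)
        print(f"{per_node} GPU(s)/node: {intra} intra-node, {inter} inter-node")
```

With 800 GPUs at four per node, for example, only 199 of the 799 boundaries cross between nodes, versus all 799 if every GPU sat in its own node.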

According to the paper, the model was set up on a domestic general-purpose x86 computing platform. The CPUs used were domestic Hygon processors, model number 7185 - with 32 cores, 64 threads, and a 2.5 GHz clock speed. The GPUs were also domestic, supported by 128GB of memory and a network bandwidth of 200 Gb/s.

The new model reached a speedup of six with only seven nodes - about 89 per cent fewer than the number used by TRITON.
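
The 89 per cent figure follows directly from the node counts. A quick check in Python also shows the ratio of node counts, which equals the ratio of speedup per node because both models reach roughly 6x; reading the headline "near-tenfold" figure as this per-node ratio is an inference, not something the article states.

```python
# Node counts and speedup as reported in the article.
triton_nodes = 64
new_nodes = 7
reported_speedup = 6.0  # both models reach roughly 6x

# Fraction of nodes saved by the new model relative to TRITON.
reduction = 1 - new_nodes / triton_nodes
# How many times more nodes TRITON needed for the same ~6x speedup.
node_ratio = triton_nodes / new_nodes

# Speedup delivered per node (a simplified comparison metric, assumed here).
per_node_triton = reported_speedup / triton_nodes
per_node_new = reported_speedup / new_nodes

print(f"node reduction: {reduction:.1%}")                       # node reduction: 89.1%
print(f"node ratio: {node_ratio:.2f}x")                          # node ratio: 9.14x
print(f"per-node gain: {per_node_new / per_node_triton:.2f}x")   # per-node gain: 9.14x
```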

To validate the model's effectiveness and assess its computational efficiency, Nan's team chose the Zhuangli Reservoir in Zaozhuang, in east China's Shandong province, as the simulation subject.

Using 200 computational nodes and 800 GPUs, the model simulated the flood evolution process in just three minutes, achieving a speedup of over 160 times - far surpassing that of the TRITON model.
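
A back-of-the-envelope reading of the Zhuangli Reservoir run: 800 GPUs across 200 nodes is four GPUs per node. Assuming the reported speedup is the ratio of the baseline run time to this three-minute run (the article does not name the baseline), the equivalent baseline would take roughly eight hours or more.

```python
nodes = 200
gpus = 800
gpus_per_node = gpus // nodes  # GPUs packed into each node

t_parallel_min = 3.0      # reported wall-clock time of the simulation, in minutes
reported_speedup = 160.0  # the article says "over 160", so this is a lower bound

# Assumption: speedup = T_baseline / T_parallel for this same simulation,
# so the implied baseline time is speedup * parallel time.
implied_baseline_min = reported_speedup * t_parallel_min

print(gpus_per_node)               # 4
print(implied_baseline_min / 60)   # 8.0 (hours, at least)
```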

"Simulating floods at a river basin scale in just minutes means real-time simulations of flood evolution and various rainfall-run-off scenarios can now be conducted more quickly and in greater detail. This can enhance flood control and disaster prevention efforts, improve real-time reservoir management, and ultimately reduce loss of life and property," Nan said in the paper.

The code for the research is available on an open-source website. Nan added that the findings could be applied not just to flood modelling but also to simulations for complex systems in fields like hydrometeorology, sedimentation, and surface-water-groundwater interactions.

"Future work will expand its applications and further test its stability in engineering practices," he added.

Feb 12 2025, 06:14 PM
