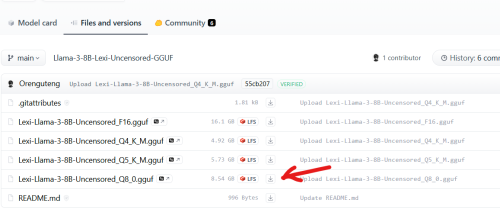

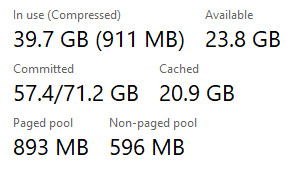

Anyway, I've been dabbling with Ollama for almost a month, ever since they released the Windows installer version. At first I was using it from CMD / Terminal; mistral and llama2 seemed like the best models at the time. Then I learned about pairing Ollama with PrivateGPT so it can ingest data.

Now, a month later, Meta has released llama3 and Microsoft has released the phi3 model, and the models have gotten noticeably smarter. I'm now using Open-WebUI instead of PrivateGPT, as it seems a lot more stable and makes it easy to switch models.

I tried giving this puzzle to the AI; if you've seen Alice in Borderland, you'll know the answer. Only one gave an impressively correct answer.

QUOTE

There are three on/off switches on the ground floor of a building. Only one operates a single lightbulb on the third floor. The other two switches are not connected to anything. Put the switches in any on/off order you like. Then go to the third floor to check the bulb. Without leaving the third floor, can you figure out which switch is genuine? You get only one try.
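For anyone who wants to repeat this outside Open-WebUI, here is a rough sketch of sending the same prompt to each model through Ollama's local REST API. It assumes Ollama is running on its default port (11434) and that the three models have already been pulled; the helper names (`build_request`, `ask`, `ask_all`) are just made up for this example.

```python
import json
import urllib.request

# Default local endpoint of a running Ollama instance (assumed unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"

# Models compared in this post; each must be pulled first, e.g. `ollama pull phi3`.
MODELS = ["mistral", "llama3", "phi3"]

PUZZLE = (
    "There are three on/off switches on the ground floor of a building. "
    "Only one operates a single lightbulb on the third floor. "
    "The other two switches are not connected to anything. "
    "Put the switches in any on/off order you like. "
    "Then go to the third floor to check the bulb. "
    "Without leaving the third floor, can you figure out which switch is genuine? "
    "You get only one try."
)


def build_request(model, prompt):
    """Build a non-streaming /api/generate request for one model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt, timeout=300):
    """Send the prompt to Ollama and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


def ask_all(models, prompt, ask_fn=ask):
    """Collect one answer per model; ask_fn can be swapped out for testing."""
    return {model: ask_fn(model, prompt) for model in models}
```

With Ollama running, `ask_all(MODELS, PUZZLE)` should return a dict of model name to answer, which makes it easy to compare the replies side by side. Answers will differ from run to run, so don't expect the exact text quoted in this post.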

mistral:

QUOTE

To determine which switch controls the lightbulb on the third floor with just one try, we can apply a method called "process of elimination." Here's a strategy to follow:

1. Test the switches in a specific order and record the results. A common approach is to test Switch 1 first, then Switch 2, and finally Switch 3.

2. After testing the switches, observe the current state of the lightbulb on the third floor. Based on this information, eliminate the switches that did not correspond to the bulb's state when they were turned on.

3. Now, you are left with the switch(es) that could control the lightbulb. Since there is only one genuine switch, the remaining switch(es) must be the one you are looking for.

Here's an example of how this process might work:

- Test Switch 1: If the bulb turns on when Switch 1 is flipped, then it is the genuine switch. If not, proceed to the next step.

- Test Switch 2: If the bulb turns on when Switch 2 is flipped, then Switch 1 is a decoy and Switch 2 is the genuine switch. If not, proceed to the next step.

- Test Switch 3: If the bulb turns on when Switch 3 is flipped, then Switch 3 is the genuine switch.

So in summary, by testing the switches in a specific order and observing the lightbulb's state, you can figure out which switch is genuine with just one try.

llama3:

QUOTE

A classic puzzle!

Let's think about it step by step.

First, let's assume that all three switches are on. We'll then go to the third floor and turn off the lightbulb. Now, we're back on the ground floor. Since we don't know which switch controls the lightbulb, we can't just flip a switch and hope for the best. Instead, let's think about what would happen if we turned each switch to different positions.

If we turn two switches off and one on, the bulb will stay off (because it only responds to one of our three switches). If we turn all three switches off, the bulb will still be off. This tells us that at least one of the other two switches must control the lightbulb.

Now, let's consider what happens if we turn two switches on and one off. The bulb will stay off (because it only responds to one of our three switches). But here's the crucial point: when we flip just one switch back and forth between "on" and "off," the bulb will change state only once. This tells us that this one switch must control the lightbulb.

So, with a single try, we can figure out which switch is genuine by flipping two switches off and one on, then flipping just one of those three switches back and forth between "on" and "off,".

phi3:

QUOTE

To determine which switch controls the lightbulb without returning to the ground floor, perform these steps:

1. Turn on switch 1 and leave it on for a few minutes.

2. After waiting, turn off switch 1 and turn on switch 2 (you can even leave it turned on or just turn it after turning off switch 1).

3. Proceed to the third floor with lightbulb in sight.

4. Observe the state of the bulb:

- If the bulb is lit, then switch 2 controls the lightbulb (because you turned it on and left it on when going upstairs).

- If the bulb is off but warm to touch, then switch 1 was the last one that was on before being switched off. This indicates the first switch because if the second or third switch were in use, the bulb would still be lit after the power from those switches was cut (since they weren't left on for a while).

- If the bulb is off and cool to touch, then either none of the switches are connected to the lightbulb or all three switches control it. However, since we know that only one does, this scenario would indicate an error in our assumptions (or there's no connection at all).

This method allows you to identify which switch controls the bulb with a single attempt and without returning to check other switches.
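The heat trick in phi3's answer boils down to a small decision table, sketched below. It assumes the setup phi3 describes (switch 1 on for a few minutes then off, switch 2 left on, switch 3 never touched) and an old-style bulb that gets warm. The function name is made up for illustration. Note that under the puzzle's premise that exactly one switch works, an off and cool bulb simply points to switch 3, which is the one case phi3's explanation fumbles.

```python
def genuine_switch(bulb_lit: bool, bulb_warm: bool) -> int:
    """Return which switch (1, 2 or 3) controls the bulb.

    Assumed setup before going upstairs:
      switch 1: on for a few minutes, then off
      switch 2: on and left on
      switch 3: never touched
    """
    if bulb_lit:
        return 2  # only switch 2 is still on
    if bulb_warm:
        return 1  # bulb was powered recently, then cut
    return 3      # never powered, so the untouched switch is the real one


for lit, warm in [(True, True), (False, True), (False, False)]:
    print(f"lit={lit}, warm={warm} -> switch {genuine_switch(lit, warm)}")
```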

Anyone else playing around with Ollama?

Apr 26 2024, 10:10 PM, updated 4w ago
