The 3DMark Time Spy DX12 benchmark came out a few days ago, and results and analysis have been pouring in across the net. It has also spurred quite a lot of discussion here, in both the AMD and Nvidia threads, especially among those still using or planning to buy second-hand past-generation GPUs (Fiji, Maxwell etc.) and those who have already bought or are planning to buy the new AMD Polaris and Nvidia Pascal GPUs.

I have seen quite a number of users asking this question: "Will my CPU bottleneck the new GPU I bought / am planning to buy?" Some are still using an i5-750, others anything up to the new i5-6600 Skylake and the models in between (I have not seen, and don't think there will be, anyone with an i7-6700K asking this question). I don't have an answer to this question.

But it got me thinking. I already know that the overall score depends on the physics test score (if the GPU is the same), which in turn depends on how powerful your CPU is. But does CPU performance have any effect on the Graphics test results? That's what I wanted to test and share here. I hope this topic does not turn into a thread about which brand or model is better than the other. Even though I have an Nvidia GPU now, I want this to be a healthy discussion, and I hope anyone with ideas or opinions can chip in, no matter whether you use AMD, Nvidia or Intel products.

My method was to change the number of active CPU cores through the BIOS and run the Time Spy bench at each setting. Brief details of my setup and method:

My PC spec:

- Intel i7-4930K 6 Core/ 12 Threads Ivy Bridge OC to 4.6 GHz across all cores. Hyper-Threading, Intel Turbo/ SpeedStep enabled. Enermax ELC240 AIO Liquid CPU Cooler.

- Asus X79 Deluxe Mobo, 2 x 8GB Corsair Vengeance DDR3 RAM OC to 2133 MHz, Adata SP 900 256 GB SSD, Gigabyte GTX 1080 G1 Gaming GPU and Samsung 28" UHD 3840 x 2160 Monitor (through DisplayPort), Windows 10 Pro 64-Bit and latest Nvidia 368.81 driver

Test Method

- I used the BIOS CPU settings to change the "Active Core" parameter to 2, 4 or All (6) cores. Other settings remained unchanged.

- After each CPU core change and Windows boot, I OC'd the GPU using Afterburner: +140 MHz clock, +190 MHz memory, power limit 108% and temp limit 92C.

- I used HWMonitor to watch CPU utilization and only started the Time Spy bench after the CPU had idled (to minimize or avoid any apps that start with Windows boot affecting the test run).

- Afterburner and HWMonitor were closed before running each bench. After each Time Spy run, I saved the result locally to my PC and also validated it online.

- I used the 3DMark online compare to put the results side by side for easy comparison, and the locally saved files to view details including the monitoring graphs.

So here are the results:

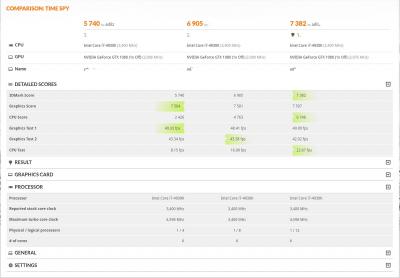

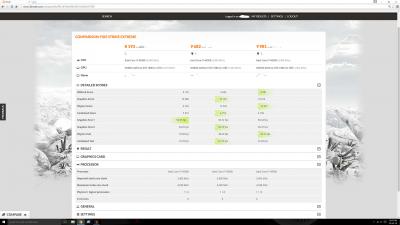

3DMark Time Spy Default Bench Test Comparison

From left to right: 2C/4T, 4C/8T and 6C/12T

Firstly, please ignore the maximum CPU turbo clock for the 4C/8T run being shown as only 3.4 GHz. I have had this problem since the Fire Strike days: sometimes a 3DMark run does not show the correct turbo clock. Also, 3DMark showed the number of cores as 6 for all 3 runs, but the logical processor count (4, 8 and 12 threads) indicates the active CPU cores.

As I expected, the overall score scales with the CPU core/thread count. But as you can see, regardless of whether the CPU runs 2, 4 or 6 cores, there is very little variance in the Graphics Test 1 and Test 2 scores or fps. The physics score and fps scaled linearly going from 2 to 4 to 6 cores. So only the physics score contributed to the overall score variance.

The default Time Spy bench runs at 1440p, so I wondered whether this would change if I bumped it up to 4K.

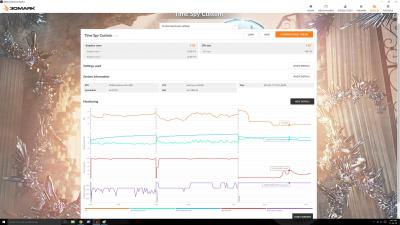

3DMark Time Spy Custom 4K Bench Test Comparison

From left to right: 2C/4T, 4C/8T and 6C/12T.

To test 4K res, I had to run Time Spy in custom mode, which does not give an overall score. Anyway, again there was very little variance in the Graphics Test 1 and 2 scores/fps, and the physics score scaled with CPU core count.

So looking at both results (and this is where I hope you guys can chip in with your opinions, because I don't know if my opinion is right or not), this was my initial thought, based purely on these results: regardless of whether you have a Pentium Dual Core, i5 or i7, the graphics performance remains the same. Only the overall score differs based on how powerful your CPU is. Nothing unexpected and kinda boring.

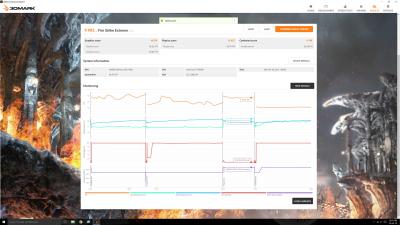

But when I looked at the detailed results and the CPU/GPU monitoring graphs (from the 3DMark app), that's where it got interesting. These results are from the same Custom 4K run.


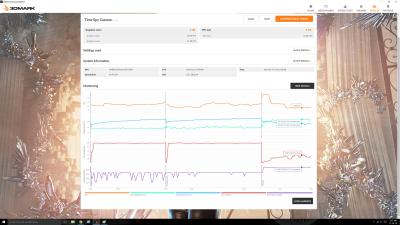

Left to right pictures: 2 Core, 4 Core and 6 Cores Time Spy Custom 4K run results and monitoring graphs.

Comparing the results under the different test categories:

Graphics Test 1

While the GPU was fully loaded, this test put little stress on the CPU. Regardless of whether it was 2, 4 or 6 cores, the CPU speed only fluctuated between 1.2 GHz and a max of 3.4 GHz. However, you might notice that the 2C graph spent more time at 3.4 GHz compared to the 4- and 6-core tests. It looks like none of the CPU configurations went into Turbo.

Graphics Test 2

Graphics Test 2 began to have an effect on the CPU. The 2C run's CPU speed now fluctuated between 2.6 GHz and the 4.6 GHz Turbo. Even though 4C and 6C still didn't go above 3.4 GHz, you will notice 4C spent a lot more time touching 3.4 GHz compared to 6C. Because the graphics score and fps don't vary much across the 3 tests, my take is that the GPU now requires the CPU with the lower core count to work harder to deliver the same level of performance as the more powerful, higher core-count CPU.

Physics Test

This is the most interesting. The physics test fps scaled with CPU core count, with all the CPU configurations maxing out at 4.6 GHz. Looking at the fps roughly 3/4 of the way through each test run: 2 cores: 7.72 fps, 4 cores: 15.65 fps and 6 cores: 23.19 fps.
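To put a number on "scaled with core count", here is a quick sketch in Python using only the three fps figures above. The function name `scaling_table` and the choice of the 2-core run as the baseline are just my own picks for illustration; nothing here comes from 3DMark itself.

```python
# Physics test fps read off the monitoring graphs, keyed by active core count.
physics_fps = {2: 7.72, 4: 15.65, 6: 23.19}


def scaling_table(fps_by_cores):
    """Return (cores, fps_per_core, speedup, efficiency) for each run.

    speedup is relative to the run with the fewest cores; efficiency is
    speedup divided by the ideal (perfectly linear) speedup.
    """
    base_cores = min(fps_by_cores)
    base_fps = fps_by_cores[base_cores]
    rows = []
    for cores in sorted(fps_by_cores):
        fps = fps_by_cores[cores]
        speedup = fps / base_fps
        ideal = cores / base_cores
        rows.append((cores, fps / cores, speedup, speedup / ideal))
    return rows


for cores, per_core, speedup, eff in scaling_table(physics_fps):
    print(f"{cores} cores: {per_core:.2f} fps/core, "
          f"{speedup:.2f}x vs 2 cores, {eff:.0%} of ideal")
```

All three runs come out at roughly 3.9 fps per core, so on these numbers the physics test scaling is as close to linear as the graphs let me read it.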

But look at the GPU load. With the 2-core CPU, the GPU was sweating at 40% load to deliver 7.7 fps, whereas the 6-core CPU almost didn't need the GPU: a walk-in-the-park 6% load to deliver 23 fps.

I thought, if the Physics test is only meant to stress the CPU, shouldn't the GPU load remain the same regardless of CPU performance? Is this an indication of CPU bottlenecking or something else?
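One rough way to look at those two GPU load readings is to convert them into "GPU busy time per frame". This is only a sketch, and it rests on an assumption of mine: that the load percentage in the monitoring graph is simply the fraction of time the GPU was busy. The 40% and 6% figures are eyeballed from the graphs, and `gpu_busy_ms_per_frame` is a made-up helper name.

```python
def gpu_busy_ms_per_frame(fps, gpu_load_percent):
    """Estimate GPU busy time per frame in milliseconds.

    Assumes gpu_load_percent is the fraction of wall-clock time the GPU
    was busy, which may not be how the monitoring tool defines it.
    """
    frame_time_ms = 1000.0 / fps
    return frame_time_ms * gpu_load_percent / 100.0


# (fps, approximate GPU load %) from the physics test graphs.
runs = {"2 cores": (7.72, 40.0), "6 cores": (23.19, 6.0)}

for label, (fps, load) in runs.items():
    busy = gpu_busy_ms_per_frame(fps, load)
    print(f"{label}: {1000.0 / fps:.1f} ms per frame, "
          f"about {busy:.1f} ms of it with the GPU busy")
```

Under that assumption the GPU was busy for roughly 52 ms of every frame on 2 cores but under 3 ms per frame on 6 cores. If the GPU did the same amount of work per frame in both runs, I would expect the load to go up with fps, not down, so whatever is going on is not just the same work spread over longer frames. It doesn't tell me why, though.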

What do you guys think?

This post has been edited by adilz: Jul 20 2016, 11:42 PM

Jul 17 2016, 05:12 AM, updated 10y ago
