
Nvidia GPUs don't handle async compute well, gimpwork again?


TSzerorating

Feb 25 2016, 09:40 AM, updated 10y ago

Wadefak Nvidia, what are you doing? Maxwell GPUs actually lose performance when async compute is used. Async compute is a killer DX12 feature for getting more performance out of the GPU to create better visuals (it leaves the shader engines less time idle while handling heavy graphics tasks). No wonder their GPUs are so efficient in current DX11 titles: they don't make their GPUs future-proof (a simpler design with really high clock speeds). In a few years, the PS4 might have better visuals than a GTX 960 in future games, and Maxwell-based graphics cards might be legacy products next year (they already did with exclusive games like The Order: 1886 and upcoming games like Uncharted 4 or Ratchet & Clank).

Nvidia: "You want great DX12 performance? Buy a new card."

http://www.extremetech.com/gaming/223567-a...tx-12-benchmark

This post has been edited by zerorating: Feb 25 2016, 10:16 AM

SUStlts

Feb 25 2016, 09:49 AM

That's why I've supported AMD for so many years.

skinny-dipper

Feb 25 2016, 09:50 AM

Getting Started

2016/2017 is gonna be AMD's year.

ruffstuff

Feb 25 2016, 09:52 AM

Who has played Ashes of the Singularity?

SUScrash123

Feb 25 2016, 09:53 AM

Getting Started

DX12 needs at least 3-4 years to be widely used across games. Do you think that when DX11 came out, all games were using DX11 by the next year?

SUSIMORTALfatahL2401

Feb 25 2016, 09:56 AM

New Member

QUOTE(zerorating @ Feb 25 2016, 09:40 AM) Wadefak Nvidia, what are you doing? Maxwell GPUs actually lose performance when async compute is used. Async compute is a killer DX12 feature for getting more performance out of the GPU to create better visuals (it leaves the shader engines less time idle while handling heavy graphics tasks). No wonder their GPUs are so efficient in current DX11 titles: they don't make their GPUs future-proof (a simpler design with really high clock speeds). In a few years, the PS4 might have better visuals than a GTX 960 in future games, and Maxwell-based graphics cards might be legacy products next year (they already did with exclusive games like The Order: 1886 and upcoming games like Uncharted 4 or Ratchet & Clank). Nvidia: "You want great DX12 performance? Buy a new card." http://www.extremetech.com/gaming/223567-a...tx-12-benchmark

Blame it on AMD: their slow development means Nvidia doesn't feel any competition and makes shit products for consumers.

M4YH3M

Feb 25 2016, 09:59 AM

Getting Started

So older Nvidia cards run slower when async compute is turned on? Is it because of a software limitation, or does the architecture not support those DX12 features?

I believe AMD cards with the GCN architecture support the DX12 features. Just wanna know whether that's because they have the software support or because they built the architecture to support it before DX12 was even out. Anybody?

TSzerorating

Feb 25 2016, 10:01 AM

QUOTE(M4YH3M @ Feb 25 2016, 09:59 AM) So older Nvidia cards run slower when async compute is turned on? Is it because of a software limitation, or does the architecture not support those DX12 features? I believe AMD cards with the GCN architecture support the DX12 features. Just wanna know whether that's because they have the software support or because they built the architecture to support it before DX12 was even out. Anybody?

Async compute is supported through a software implementation, meaning the shader tasks are queued serially (not in parallel). It's not an actual hardware implementation.

TSzerorating

Feb 25 2016, 10:03 AM

QUOTE(nyess @ Feb 25 2016, 09:55 AM)
>implying pascal won't fix that
>assuming maxwell won't get updated
>assuming amd zen will actually deliver 40% IPC increase
tfw neither does amd have FULL dx12 support

As long as the major features are hardware-supported, minor features can be brute-forced or software-emulated. It doesn't matter much.

ALeUNe

|

Feb 25 2016, 10:09 AM Feb 25 2016, 10:09 AM

|

I'm the purebred with aristocratic pedigree

|

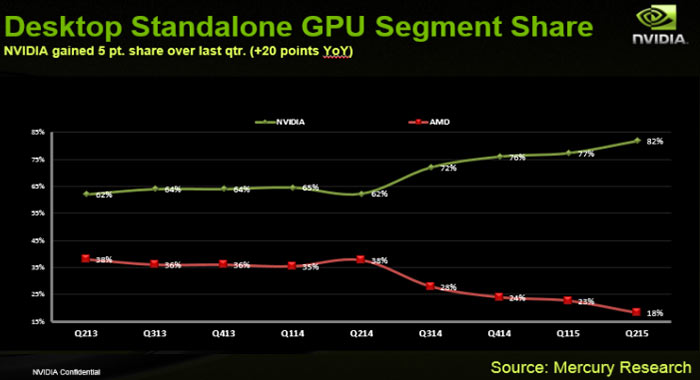

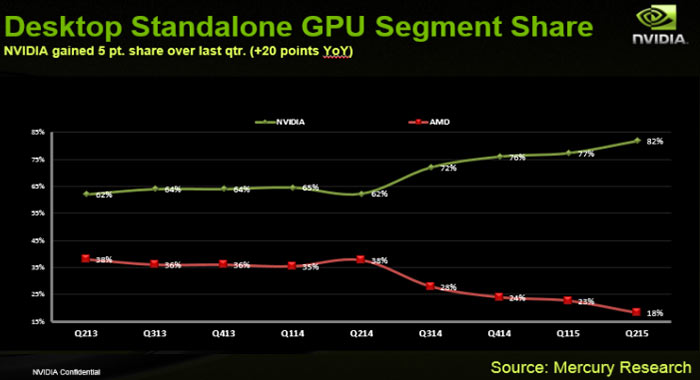

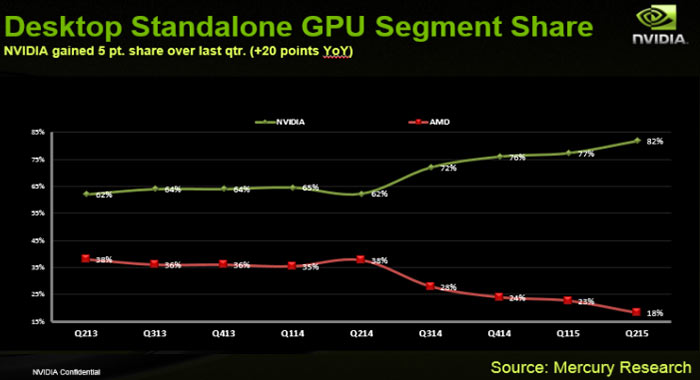

Kesian the low class. Market doesn't lie.  P/S BTW, how many DX12 titles tested? This post has been edited by ALeUNe: Feb 25 2016, 10:09 AM |

|

|

|

|

|

TSzerorating

Feb 25 2016, 10:11 AM

QUOTE(crash123 @ Feb 25 2016, 09:53 AM) DX12 needs at least 3-4 years to be widely used across games. Do you think that when DX11 came out, all games were using DX11 by the next year?

The Rise of the Tomb Raider engine is DX12-ready, but Nvidia suggested that S.E not push it for public use. Hitman, Deus Ex, and Fable Legends are gonna be DX12 at release, and all of them support async compute.

alfiejr

Feb 25 2016, 10:18 AM

Will wait for Pascal.

TSzerorating

Feb 25 2016, 10:19 AM

QUOTE(ALeUNe @ Feb 25 2016, 10:09 AM) Pity the low class. The market doesn't lie. P/S: BTW, how many DX12 titles were tested?

It's just because AMD didn't have a new product during the Maxwell launch. With all the GameWorks scandals and AMD set to be early with Polaris (they even demoed their low-end GPU), they will gain a little bit of market share.

SUScrash123

Feb 25 2016, 10:19 AM

Getting Started

QUOTE(zerorating @ Feb 25 2016, 10:11 AM) The Rise of the Tomb Raider engine is DX12-ready, but Nvidia suggested that S.E not push it for public use. Hitman, Deus Ex, and Fable Legends are gonna be DX12 at release, and all of them support async compute.

DX12 isn't only about async compute. There are other methods to deliver DX12.

TSzerorating

Feb 25 2016, 10:20 AM

QUOTE(alfiejr @ Feb 25 2016, 10:18 AM)

How about current products? Can throw them in the dustbin? It's disappointing for me as a GTX 760 owner; it's already becoming one of the lowest tiers in the latest games.

TendouJigoku

Feb 25 2016, 10:22 AM

New Member

I'll just chill out with my GTX 970 while waiting for Pascal.

TSzerorating

Feb 25 2016, 10:23 AM

QUOTE(crash123 @ Feb 25 2016, 10:19 AM) DX12 isn't only about async compute. There are other methods to deliver DX12.

It's a great feature for getting more performance from the GPU, so why are developers holding off on using it? When a game performs well, consumers complain less, or you can make the game look better. It works well on consoles. Rise of the Tomb Raider on Xbox One depends heavily on async compute, which helps deliver great visuals on the low-spec console.

This post has been edited by zerorating: Feb 25 2016, 10:27 AM

TSzerorating

Feb 25 2016, 10:31 AM

QUOTE(nyess @ Feb 25 2016, 10:28 AM) Bro, this is SO old la. I suggest you use the search function OR Google to learn more about it.

It's an article from yesterday la. As far as I know, async compute was not enabled for Nvidia GPUs in the last Ashes build (now we know why: because it runs slower).

SUScrash123

Feb 25 2016, 10:32 AM

Getting Started

QUOTE(zerorating @ Feb 25 2016, 10:23 AM) It's a great feature for getting more performance from the GPU, so why are developers holding off on using it? When a game performs well, consumers complain less, or you can make the game look better. It works well on consoles. Rise of the Tomb Raider on Xbox One depends heavily on async compute, which helps deliver great visuals on the low-spec console.

Who's holding off? Nixxes already said they will release a patch for DX12. Why are they still working on it? Maybe it's not stable. And your claim that Nvidia won't let Nixxes release DX12 for Tomb Raider is just a bullshit conspiracy statement.

TSzerorating

Feb 25 2016, 10:34 AM

QUOTE(crash123 @ Feb 25 2016, 10:32 AM) Who's holding off? Nixxes already said they will release a patch for DX12. Why are they still working on it? Maybe it's not stable. And your claim that Nvidia won't let Nixxes release DX12 for Tomb Raider is just a bullshit conspiracy statement.

Hint: Nvidia has a contract with S.E; it's an Nvidia game. They will release the patch when the contract ends.

Quantum Geist

Feb 25 2016, 10:36 AM

Getting Started

AMD has async modules, whilst Nvidia doesn't in the current generation (it's simulated through software instead). If I'm not mistaken, a few PS4 and XBone developers are starting to implement async.

Wait for Polaris and/or Pascal. But Pascal may be a bit slower to release, since there are rumors that they're having trouble in production.

RevanChrist

Feb 25 2016, 10:38 AM

New Member

Doesn't matter. Going to jump ship to Polaris/Pascal. Maxwell cuts too many corners for power consumption optimization. Hawaii is too power hungry.

SUScrash123

Feb 25 2016, 10:38 AM

Getting Started

QUOTE(zerorating @ Feb 25 2016, 10:34 AM) Hint: Nvidia has a contract with S.E; it's an Nvidia game. They will release the patch when the contract ends.

Got any proof?

TSzerorating

Feb 25 2016, 10:41 AM

QUOTE(crash123 @ Feb 25 2016, 10:38 AM)

Have you even heard of a non-disclosure agreement? It would hurt both companies if they told the public about it. Do you want S.E to say, "We intentionally sabotaged AMD because we wanted to"? And why are you so defensive? We shouldn't support a company that tries hard to hold back new technology that actually helps.

This post has been edited by zerorating: Feb 25 2016, 10:45 AM

unknown_2

Feb 25 2016, 10:49 AM

QUOTE(zerorating @ Feb 25 2016, 10:20 AM) How about current products? Can throw them in the dustbin? It's disappointing for me as a GTX 760 owner; it's already becoming one of the lowest tiers in the latest games.

Nvidia is like a hot girl, but the catch is she ages terribly. AMD is that OK-looking girl with inner beauty. Choose your girl.

TSzerorating

Feb 25 2016, 10:49 AM

QUOTE(nyess @ Feb 25 2016, 10:47 AM) The article is about the new update for the game, now with async compute. Your thread is about how Nvidia hurr durr gimped and doesn't handle async compute well. Slowpoke la. If you already knew about the issue, first addressed almost half a year ago, you wouldn't open a thread like a slowpoke. Everybody knows already. You'd just read the article and keep the thoughts to yourself, not go as far as opening a thread: "eh eh nvidia hurr gimpwork again durr?"

We know Nvidia has stated that their products do support async compute (even if in software), but we hadn't heard that it would lose performance.

SUScrash123

Feb 25 2016, 10:51 AM

Getting Started

QUOTE(zerorating @ Feb 25 2016, 10:41 AM) Have you even heard of a non-disclosure agreement? It would hurt both companies if they told the public about it. And why are you so defensive?

There we go with the conspiracy theory. Why am I so defensive? Coz people are spewing bullshit without proof. Just because Nvidia sucks at one game that uses DX12, let's assume they will suck at all DX12 games? Wait for other games, then we can draw a conclusion. I'm not an Nvidia fan. You will see in my posts that I recommend the R9 390/390X over the GTX 970/980, the GTX 980 Ti over the Fury X, and Fury X CF over GTX 980 Ti SLI. Inb4 nvidia fag. Inb4 nvidia butthurt.

TSzerorating

Feb 25 2016, 11:04 AM

QUOTE(crash123 @ Feb 25 2016, 10:51 AM) There we go with the conspiracy theory. Why am I so defensive? Coz people are spewing bullshit without proof. Just because Nvidia sucks at one game that uses DX12, let's assume they will suck at all DX12 games? Wait for other games, then we can draw a conclusion. I'm not an Nvidia fan. You will see in my posts that I recommend the R9 390/390X over the GTX 970/980, the GTX 980 Ti over the Fury X, and Fury X CF over GTX 980 Ti SLI. Inb4 nvidia fag. Inb4 nvidia butthurt.

It's common practice for a company to sabotage another company. But I don't agree with ripping off existing customers who own older products (making a product legacy in just over 1 year). Not everyone has RM3000+ to spend on a new graphics card just so it stays relevant for 3 years. Okay, we'll wait for other benchmarks with an async compute toggle, but I doubt we'll see one if GPUs from both sides can support it.

This post has been edited by zerorating: Feb 25 2016, 11:12 AM

Demon_Eyes_Kyo

Feb 25 2016, 11:19 AM

QUOTE(zerorating @ Feb 25 2016, 11:04 AM) It's common practice for a company to sabotage another company. But I don't agree with ripping off existing customers who own older products (making a product legacy in just over 1 year). Not everyone has RM3000+ to spend on a new graphics card just so it stays relevant for 3 years. Okay, we'll wait for other benchmarks with an async compute toggle, but I doubt we'll see one if GPUs from both sides can support it.

GTX 760? Same card as mine. But that's a 2013 card. How is that 1 year+?

TSzerorating

Feb 25 2016, 11:23 AM

QUOTE(Demon_Eyes_Kyo @ Feb 25 2016, 11:19 AM) GTX 760? Same card as mine. But that's a 2013 card. How is that 1 year+?

The GTX 760 may have had a longer shelf life, but the GTX 780 Ti was released in November 2013, and the GTX 700 series became legacy products in 2015.

Demon_Eyes_Kyo

Feb 25 2016, 01:00 PM

Being classified as legacy doesn't mean it's written off, bro. It just means they've reached a state where the drivers are pretty stable and don't require a lot of changes. I believe the 780 Ti is still very much capable of playing many games at pretty good settings nowadays.

Btw, not defending Nvidia at any point. Just saying that once the drivers are stable, a card gets classified as legacy. The same happened with my ATI 4890 back then; after about 1.5 to 2 years it went legacy as well.

deodorant

Feb 25 2016, 01:06 PM

If I'm thinking of buying a PC (looking at upper/mid range like the GTX 970), is it OK to buy now, or should I wait a bit for upcoming tech?

TSzerorating

Feb 25 2016, 01:16 PM

QUOTE(Demon_Eyes_Kyo @ Feb 25 2016, 01:00 PM) Being classified as legacy doesn't mean it's written off, bro. It just means they've reached a state where the drivers are pretty stable and don't require a lot of changes. I believe the 780 Ti is still very much capable of playing many games at pretty good settings nowadays. Btw, not defending Nvidia at any point. Just saying that once the drivers are stable, a card gets classified as legacy. The same happened with my ATI 4890 back then; after about 1.5 to 2 years it went legacy as well.

In my experience with graphics cards, once a product reaches legacy status, game optimizations are no longer made for it, only bug fixes or optimizations that apply to all products, which makes it even shittier than recent products with lower specifications. Yes, I know graphics budgets differ between games: some depend heavily on polygon counts, others are heavy on shaders or post-processing, and some depend heavily on memory throughput and memory size.

tl;dr: a GPU that can run Quake 3 at 5000 fps won't necessarily perform better in the latest games than a GPU that runs Quake 3 at 1000 fps.

How come the GTX 760 is weaker than the HD 7850 in the latest games, even though it has a Game Ready driver?

This post has been edited by zerorating: Feb 25 2016, 01:22 PM

dante1989

Feb 25 2016, 01:20 PM

Getting Started

ayyyy GTX 760 owner here too

jamilselamat

Feb 25 2016, 01:20 PM

Getting Started

QUOTE(nyess @ Feb 25 2016, 09:51 AM) You only just found out? People have known for more than 6 months.

This. It was the source of the dispute between the Ashes developer and Nvidia's marketing team.

EDIT: I made a thread about it 6 months ago. https://forum.lowyat.net/index.php?showtopic=3696104&hl=

This post has been edited by jamilselamat: Feb 25 2016, 01:25 PM

SUStlts

Feb 25 2016, 01:20 PM

QUOTE(unknown_2 @ Feb 25 2016, 10:49 AM) Nvidia is like a hot girl, but the catch is she ages terribly. AMD is that OK-looking girl with inner beauty. Choose your girl.

I will fak the hot girl, then marry the inner beauty one... while still fakking the hot girl every time.
