QUOTE(cstkl1 @ Jul 27 2015, 10:27 PM)

haswell benefit

if u want more

faster encoding via the huge dmi bandwidth.

sata 3 native ports

usb 3 ports

skylake benefits

NVME ssd

native usb 3.1 ports ( not sure just assuming at this point)

skylake: still deciding whether i should go nvme. definitely no obvious gain other than watching ssd benchmarks.

zero benefit on gaming to be honest.

usb 3.1: seriously, is there any device out there that's taking advantage of its direct access to dmi gen 1 speeds??

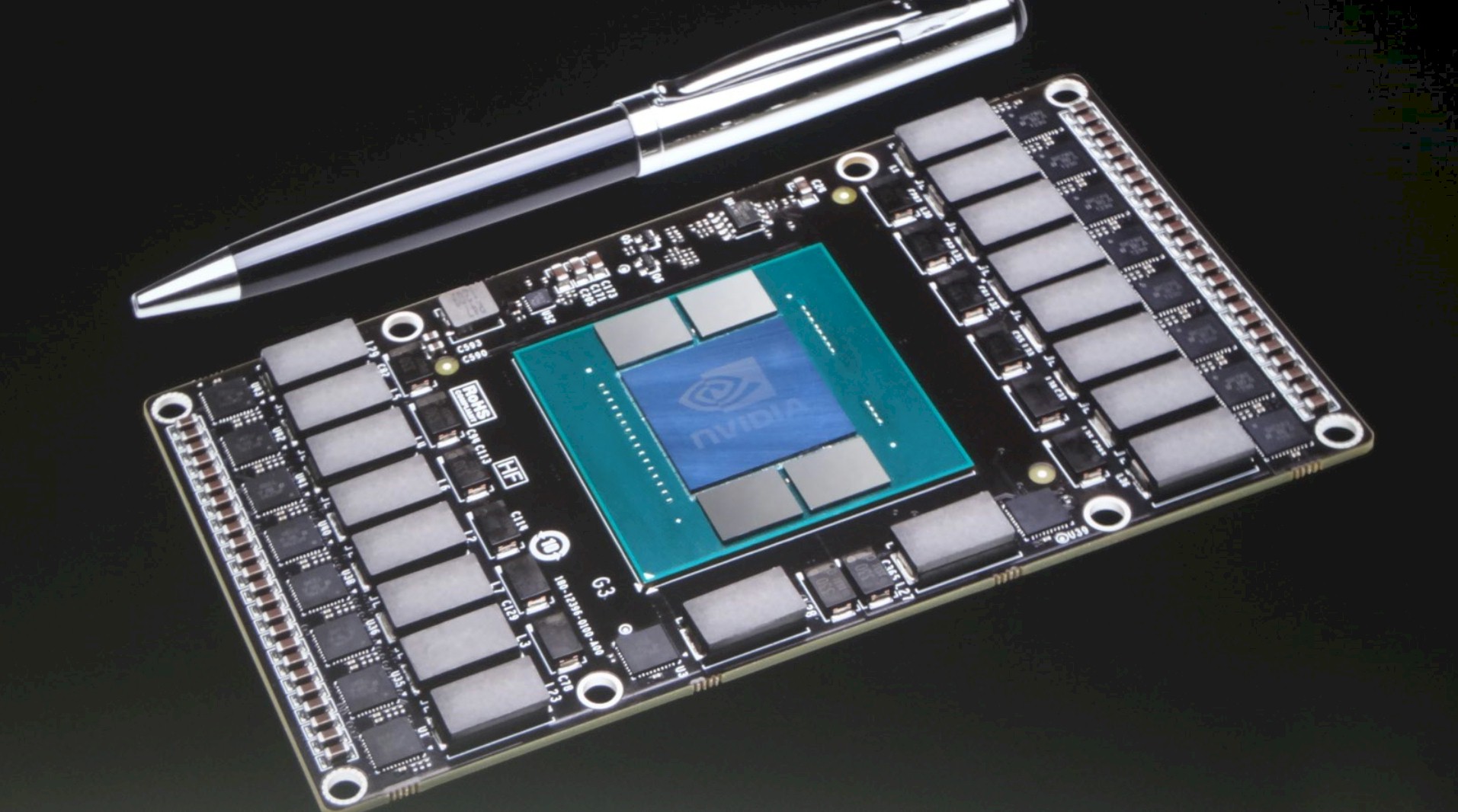

nvlink: it's not something you're gonna find beneficial on everyday commercial gaming setups.

it will however open up a possible octa titan pascal. also with such tech i am pretty sure nvidia has solved some multi gpu scaling here.

also interesting part about nvlink is .. the ability of gpu to access ram and vram of the other gpu directly if i am not mistaken.

the tech is gonna solve current issues with multi gpu.

what is the performance improvement of skylake over ivy bridge?

do i need to upgrade to skylake to benefit from pascal? if not for compute performance, then at least for nvlink. but would that make any difference for single gpu setups, or would pcie3 be enough?

QUOTE(shikimori @ Jul 28 2015, 12:05 AM)

go get yourself a 144hz IPS monitor. You wont regret it man, games that you play feel really buttery smooth without sacrificing colors and viewing angle. I have not experienced g-sync or freesync but 144hz is really worth it provided you have a decent gpu

Think of it like having the COD esque movement feeling when playing non COD games, even on RTS or games like Diablo 3.

the thing is, there are a few technologies coming not long after. one i can think of is quantum dot, which they would add as a film layer onto monitors for improved color. and who knows, maybe the led backlight too would get an upgrade from the now common w-led to something like a gb-r led or better, for even better colors.

but if current ips w-led technology is more than sufficient, then yeah the acer predator 144hz gsync ips monitor seems to be the best gaming monitor out atm. the only thing i can critique is the monitor build, which has a light reflective plastic frame. but still, considering everything else passes scrutiny over at tft central, the few flaws seem worth glossing over

QUOTE(skylinelover @ Jul 28 2015, 12:12 AM)

Cannonlake wait till hair drop wont come out so soon

pulled the trigger from lynnfield 2 haswell last february with no regret haha

As 4 pascal, i still can hold out my kepler till Q3 next year if everything went according 2 plan. Being 30s means i no longer able 2 buy gpu every year unlike student days using sugar daddy money. Haha.

P/s : having 1500 per month used 2 be heaven like being student but now salary double that not enough 2 cover myself

probably need quad only enough and dont start talking about after having children with me

That is unless i live malay way of 5 children with low grade milk powder hahahaha i know my 15 yrs longest serving malay supervisor ever with slightly higher salary than me already working in 2 years can support his 5 kids comfortably and maybe with some help from the G perhaps aiks

well kepler was out in 2012, and pascal is due out in 2016. so a 4 year upgrade seems due for me. or i could possibly delay by 1-2 more years for volta (though i'd rather not do that). besides, there just isn't enough info on what volta has to even warrant waiting. not to mention, because volta was delayed, i suspect pascal will more or less be the base of what volta was meant to be, but is the product they will rush out first to cover the delay. so i'm placing my bet that the performance difference between pascal and volta won't be too huge. besides, there's always going to be something better. but i think pascal will be powerful enough to satisfy my pc gaming requirements for a long time

I think pascal is the most likely to have a performance upgrade big enough to warrant upgrading at this juncture. i'm not the type of tech enthusiast who upgrades every year, not rich enough for that

but i can tell you now that a kepler gtx 680 is not enough for current games for me. i tried ultra settings on dragon age inquisition and it was totally unplayable. had to lower quality settings to medium/high

not playing at 144hz gsync (but instead my paltry 60hz triple sync vsync mode) is one thing; but not being able to play at ultra settings at 1080p resolution on a 24" lcd ips screen is a bit too much for me to ignore

This post has been edited by Moogle Stiltzkin: Jul 28 2015, 01:18 AM
