I had the pleasure of using an Intel X3320 server processor (equivalent to the Q9300 desktop processor), although the bliss was only temporary. My rig was not stable even at stock clocks, regardless of any tweaks or BIOS updates, probably because my mobo couldn’t support it. As such, that nice processor was returned.

However, during that brief period of owning this excellent CPU, I decided to come up with a guide on the differences (and similarities) between server-class processors and their desktop equivalents. This is also in view of the frequently asked questions about how these CPUs differ.

Disclaimer:

1) I’m not in the computer industry, so forgive me for any mistakes. Computers are just my hobby.

2) Any suggestions/comments/help are most welcome; just PM me.

3) I’m in neither Intel’s nor AMD’s camp. Sponsor me an AMD rig and I’ll do a guide for you.

4) Information was gathered from the Internet.

Before we start

Some clarifications/definitions:

Xeon processors = server/workstation processors

CPU = refers to the processor and not the whole rig/computer

Index:

1) Server/workstation processors and their counterparts

2) Comparison between Xeons and desktop CPUs

3) Differences of Xeon vs. Desktop CPU - the details

--a) VID

--b) Intel I/O Acceleration Technology

--c) Demand based switching

--d) PROCHOT, THERMTRIP, PECI, Intel TXT, C2E and C4

4) Other differences - the "prefetchers"

5) CPU binning

6) Issues of compatibility with desktop motherboards

7) Conclusion

8) Other references

1) Server/workstation processors and their counterparts

I will only be concentrating on certain Xeon processors, especially the ones that can run on normal desktop computers.

Let's start by looking at the types of Xeon processors and their equivalent desktop processors.

Note that Xeon processors, like all Intel CPUs, are divided into specific "series". In this case, it is the 3000-series, which includes the 3100-series, 3200-series and 3300-series.

As you can see from the table, the Xeon processors share similar specifications with their desktop counterparts. For example:

X3360 = Q9550

X3350 = Q9450

X3320 = Q9300

This is not surprising as they actually share the same architecture and performance characteristics.

However, as you will come to realize later in this guide, there are actually some differences between these CPUs.

2) Comparison between Xeons and desktop CPUs

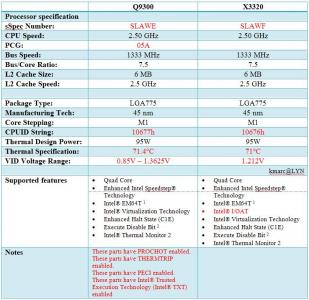

Now, let's take a look at individual CPUs. These specs were taken directly from Intel's website. I have arranged them in a table so that they are easier to compare.

The differences between the CPUs are highlighted in red.

Quad core - Q9450 vs X3350

X3350 specs : http://processorfinder.intel.com/details.aspx?sSpec=SLAX2

Q9450 specs : http://processorfinder.intel.com/details.aspx?sSpec=SLAWR

Major differences are:

1) VID:

- The X3350’s VID is fixed at 1.212v, whereas the Q9450’s ranges from 0.85v to 1.3625v

2) Q9450 has PROCHOT, THERMTRIP, PECI and Intel TXT.

3) X3350 has Intel I/O AT and Demand based switching.

Note: These differences are discussed in detail in the next section.

Quad core - Q9300 vs X3320

X3320 specs : http://processorfinder.intel.com/Details.aspx?sSpec=SLAWF

Q9300 specs : http://processorfinder.intel.com/Details.aspx?sSpec=SLAWE

Major differences are:

1) VID:

- The X3320’s VID is fixed at 1.212v, whereas the Q9300’s ranges from 0.85v to 1.3625v

2) Q9300 has PROCHOT, THERMTRIP, PECI and Intel TXT.

3) X3320 has Intel I/O AT BUT does not have Demand based switching like the X3350.

Dual core - E8400 vs E3110

E3110 specs : http://processorfinder.intel.com/details.aspx?sSpec=SLAPM

E8400 specs : http://processorfinder.intel.com/Details.aspx?sSpec=SLAPL

1) VID:

- The E3110’s VID is 0.956v – 1.225v while the E8400’s VID is 0.85v – 1.3625v

2) E8400 has PROCHOT, THERMTRIP, PECI and Intel TXT

- It also has additional features, i.e. the C2E and C4 states, which are not available on the Q9450/Q9300 quads

3) E3110 has Intel I/O AT BUT again does not have Demand based switching like the X3350.

As you can see, there ARE differences between the Xeons and desktop CPUs. These differences will be explained in detail in the next section.
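To make the pairings above easier to scan, here is a small Python sketch that stores the differing features as sets and prints what each chip has that its counterpart lacks. The feature lists are transcribed from the Intel spec comparisons above (VIDs are left out because they are ranges rather than features); the dictionary layout and the diff_features helper are my own illustration, not anything Intel provides.

```python
from __future__ import annotations

# Feature differences transcribed from the comparisons above.
# Only the features that differ between each Xeon/desktop pair are listed.
FEATURES = {
    "X3350": {"Intel I/O AT", "Demand Based Switching"},
    "Q9450": {"PROCHOT", "THERMTRIP", "PECI", "Intel TXT"},
    "X3320": {"Intel I/O AT"},
    "Q9300": {"PROCHOT", "THERMTRIP", "PECI", "Intel TXT"},
    "E3110": {"Intel I/O AT"},
    "E8400": {"PROCHOT", "THERMTRIP", "PECI", "Intel TXT", "C2E", "C4"},
}

PAIRS = [("X3350", "Q9450"), ("X3320", "Q9300"), ("E3110", "E8400")]


def diff_features(a: str, b: str) -> tuple[set[str], set[str]]:
    """Return (features only chip a lists, features only chip b lists)."""
    return FEATURES[a] - FEATURES[b], FEATURES[b] - FEATURES[a]


if __name__ == "__main__":
    for xeon, desktop in PAIRS:
        only_xeon, only_desktop = diff_features(xeon, desktop)
        print(f"{xeon} only: {sorted(only_xeon)}")
        print(f"{desktop} only: {sorted(only_desktop)}")
```

Sets are used because "what does one chip have that the other lacks" is exactly a set difference.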

3) Differences of Xeon vs. Desktop CPU - the details

Here's a summary of the differences:

Let's go into the differences in detail.

a) VID

- I have no idea why the Xeon quads' VID is listed as fixed at 1.212v. It is highly unlikely that this is true, as my own X3320's VID is 1.15v.

- However, if you get a Q9450 with the lowest VID of 0.85v at stock, wouldn’t that be an excellent processor for overclocking? The only downside is that you have to hunt for a proc with such a low VID.

- Since the Xeon quads' VID is listed as a fixed 1.212v, I guess we won't know how low the VID of a given proc can go.

- Although not confirmed by any scientific data, it is generally thought that processors with a lower VID overclock better. This is because they have more headroom to overclock, as the stock vcore is low to begin with.

--- However, many overclockers have found that for extreme overclocking, differences in VID don't really matter as the proc is pushed to its limits. Guess that's a good thing for us, as most of us are only normal overclockers.

So, in terms of VID, the desktop CPUs would be the CPU of choice IF you can find one with such a low VID. This is because the actual VIDs of the Xeons are unknown.
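The "headroom" argument above is just subtraction. The sketch below assumes, purely for illustration, a fixed vcore ceiling equal to the top of the desktop chips' VID range (1.3625v); that ceiling is my assumption, not an Intel overclocking limit, and real headroom depends on cooling and the individual chip. The VIDs are the ones mentioned in this section.

```python
# ASSUMPTION: a fixed vcore ceiling equal to the top of the desktop VID range.
# This is for illustration only, not a safe or recommended overclocking voltage.
ASSUMED_MAX_VCORE = 1.3625

# Stock VIDs mentioned in this section (volts).
VIDS = {
    "Q9450 (best case)": 0.85,
    "X3320 (spec sheet)": 1.212,
    "X3320 (my chip)": 1.15,
}


def headroom(vid: float, ceiling: float = ASSUMED_MAX_VCORE) -> float:
    """Voltage left between the stock VID and the assumed ceiling, in volts."""
    return round(ceiling - vid, 4)


for name, vid in VIDS.items():
    print(f"{name}: {headroom(vid):.4f}v of headroom")
```

Under that assumption, a 0.85v Q9450 would have about 0.51v to play with versus roughly 0.15v for a chip that really sat at 1.212v, which is why a low VID is so attractive.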

b) Intel I/O AT (Acceleration technology)

- Only present on the Xeon

Direct from Intel

QUOTE

“This unique technology moves network data more efficiently through Intel Xeon processor-based servers for fast, scaleable, and reliable networking”

In other words, Intel I/O AT reduces CPU utilization for the processing of network packets which will increase throughput and decrease latency. This frees up the CPU to do other important things.

Performance

A primary benefit of Intel I/OAT is its ability to significantly reduce CPU overhead, freeing resources for more critical tasks. Intel I/OAT uses the server’s processors more efficiently by leveraging architectural improvements within the CPU, chipset, network controller, and firmware to minimize performance-limiting bottlenecks. Intel I/OAT accelerates TCP/IP processing, delivers data-movement efficiencies across the entire server platform, and minimizes system overhead.

Scalability

Intel I/OAT provides network acceleration that scales seamlessly across multiple Gigabit Ethernet (GbE) ports. It cost-effectively scales up to eight GbE ports and up to 10GbE, with power and thermal characteristics similar to those of a standard gigabit network adapter. TCP Offload Engine (TOE) solutions, in contrast, require a separate TOE card for each port, resulting in significant cost and thermal challenges for server platforms.

Reliability

Intel I/OAT is a safe and flexible choice because it is tightly integrated into popular operating systems such as Microsoft Windows Server* 2003 and Linux*, avoiding support risks associated with relying on third-party hardware vendors for network stack updates. Intel I/OAT also preserves critical network configurations such as teaming and failover, by maintaining control of the network stack processing within the CPU—where it belongs. This results in reduced support risks for IT departments.

Features and benefits of I/O AT:

If you want more info, you can take a look at this video from Intel about I/O AT : http://softwareblogs.intel.com/2008/07/29/...nd-opensolaris/

From the information above, it is obvious that Intel I/O AT would benefit servers and workstations but probably not desktop computers.

c) Demand Based Switching (DBS)

- DBS is another power management feature that allows Xeon processors to reduce core frequency and voltage until the horsepower is actually required. The core frequency is reduced to a minimum of 2.8GHz until the demands of an application or service force the processor back to its default clock speed.

- This can substantially reduce average power consumption for servers operating at typical data centre utilization rates. According to IBM, DBS has the capability to save customers 24% annually in power cost.

- DBS is only available for the 3.4 and 3.6GHz versions of the Xeon processor (D0 stepping). These figures appear to describe an older Xeon generation rather than the 3000-series covered in this guide, since a 2.8GHz floor would be higher than the X3350's 2.66GHz stock clock.

- Note that DBS will have the most impact on data centres (naturally, as it is a server processor feature!), and thus does not benefit home users. Furthermore, home users have better power-saving features like EIST and C1E.

Wikipedia defined DBS in the most appropriate manner:

QUOTE

Intel DBS is a re-marketing of Intel’s Speedstep technology to the server marketplace.

Again, DBS would not benefit home users, as it is naturally designed for servers/workstations. Furthermore, desktop processors have Intel's SpeedStep technology and don't need DBS.
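The idea behind DBS (and SpeedStep) is to run at a lower frequency/voltage point while demand is low and jump back to full speed when demand rises. The sketch below illustrates that policy in the spirit of an "on-demand" style governor. The 80% utilization threshold is a hypothetical value of mine, and the 2.8GHz/3.6GHz figures are simply the ones quoted above; this is not Intel's actual DBS logic, whose details I don't know.

```python
# Frequencies quoted above for the DBS-capable Xeons (GHz).
MIN_FREQ_GHZ = 2.8
MAX_FREQ_GHZ = 3.6

# HYPOTHETICAL switch-up threshold; not an Intel-documented value.
UP_THRESHOLD = 0.80


def choose_frequency(utilization: float) -> float:
    """Pick the clock for the next interval from recent CPU utilization (0.0-1.0)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    # Busy: go straight back to the default clock speed.
    if utilization >= UP_THRESHOLD:
        return MAX_FREQ_GHZ
    # Otherwise idle along at the reduced clock (and voltage).
    return MIN_FREQ_GHZ


for load in (0.05, 0.50, 0.95):
    print(f"load {load:.0%} -> {choose_frequency(load)} GHz")
```

A typical server that spends most of its time lightly loaded would sit at the lower clock most of the time, which is where the claimed power savings come from.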

d) PROCHOT, THERMTRIP, PECI, TXT, C2E and C4 features

I can't be sure whether the Xeons have these features. However, they are not mentioned at all in the Xeons' datasheets, so I would assume that the spec listing is correct.

PROCHOT

Short for “Processor Hot”

Designed to activate the processor’s Thermal Control Circuit (TCC) when the processor has reached its maximum safe operating temperature. The TCC then reduces processor power consumption by modulating (i.e. starting and stopping) the internal processor core clocks, which subsequently results in a gradual decrease in core temperature.

The TCC will only activate when the Thermal Monitor is enabled.

In short, this feature will slow down the processor if it gets too hot. Definitely important for overclockers, not so much because of excessive overclocking but rather because of inadequate cooling or an improperly seated HSF.

THERMTRIP

Short for “Thermal Trip”

In the event of a catastrophic cooling failure, the processor will automatically shut down when the silicon has reached a temperature approximately 20°C above the maximum Tc. Activation of THERMTRIP indicates the processor junction temperature has reached a level beyond where permanent silicon damage may occur. Once activated, the processor will automatically shut down.

In short, this feature will shut down the processor/computer once a critical core temperature is reached. Also important for overclockers, as explained above.
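To summarise how the two protections stack up: below the maximum safe temperature nothing happens, above it PROCHOT/TCC throttles the clocks (if the Thermal Monitor is enabled), and per the datasheet text above, THERMTRIP shuts the chip down roughly 20°C higher. The sketch below models that as a simple decision function. The 70°C threshold is a hypothetical placeholder (real trip points are set by Intel and differ per model), and for simplicity it puts both thresholds on one temperature scale, whereas the datasheets mix case (Tc) and junction temperatures.

```python
from enum import Enum

# HYPOTHETICAL maximum safe temperature, for illustration only.
MAX_SAFE_TEMP_C = 70.0
# The datasheet text quoted above puts THERMTRIP roughly 20°C above the maximum.
THERMTRIP_TEMP_C = MAX_SAFE_TEMP_C + 20.0


class ThermalAction(Enum):
    NORMAL = "run at full clock"
    THROTTLE = "PROCHOT asserted: TCC modulates the core clocks"
    SHUTDOWN = "THERMTRIP: processor shuts down"


def thermal_action(temp_c: float, thermal_monitor_enabled: bool = True) -> ThermalAction:
    """Map a temperature reading to the protective response described above."""
    # In this sketch THERMTRIP is a last-resort failsafe that always applies.
    if temp_c >= THERMTRIP_TEMP_C:
        return ThermalAction.SHUTDOWN
    # The TCC only activates when the Thermal Monitor is enabled.
    if temp_c >= MAX_SAFE_TEMP_C and thermal_monitor_enabled:
        return ThermalAction.THROTTLE
    return ThermalAction.NORMAL


for reading in (45.0, 75.0, 95.0):
    print(f"{reading:.0f}°C -> {thermal_action(reading).value}")
```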

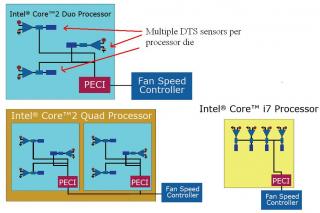

PECI (Platform Environment Control Interface)

A proprietary one-wire bus interface that provides a communication channel between the processor and chipset components to external monitoring devices.

The processor contains Digital Thermal Sensors (DTS) distributed throughout its die (i.e. temperature sensors in the die). PECI provides an interface to relay the highest DTS temperature within a die to external management devices for thermal/fan speed control.

Take a look at this diagram:

Source : http://intel.wingateweb.com/US08/published...MTS001_100r.pdf

In summary, PECI is definitely an important feature in desktop processors. Again, even though PECI is not listed for the Xeon processors, I have the impression that PECI does exist in Xeons, as I could still monitor the core temperature of my X3320 and my CPU HSF fan would speed up and slow down based on the core temperature readings.

Unless somebody knows the answer to this, I have to assume that it is also present in Xeon processors, based on my own personal experience.
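As described above, PECI's job is to hand the highest DTS reading to whatever controls the fans. The sketch below shows what a fan controller might then do with it: take the hottest sensor reading and map it onto a linear fan curve. The temperatures, the 30% to 100% duty range and the linear shape are all hypothetical choices of mine; real boards use their own (often BIOS-configurable) curves, and this code does not talk to PECI hardware at all.

```python
from __future__ import annotations

# HYPOTHETICAL fan-curve parameters, chosen only for illustration.
FAN_MIN_DUTY = 30.0   # percent, used at or below LOW_TEMP_C
FAN_MAX_DUTY = 100.0  # percent, used at or above HIGH_TEMP_C
LOW_TEMP_C = 40.0
HIGH_TEMP_C = 70.0


def hottest_sensor(dts_readings: list[float]) -> float:
    """PECI relays the highest DTS temperature on the die, so take the maximum."""
    if not dts_readings:
        raise ValueError("need at least one sensor reading")
    return max(dts_readings)


def fan_duty(temp_c: float) -> float:
    """Linear fan curve between LOW_TEMP_C and HIGH_TEMP_C, clamped at both ends."""
    if temp_c <= LOW_TEMP_C:
        return FAN_MIN_DUTY
    if temp_c >= HIGH_TEMP_C:
        return FAN_MAX_DUTY
    fraction = (temp_c - LOW_TEMP_C) / (HIGH_TEMP_C - LOW_TEMP_C)
    return FAN_MIN_DUTY + fraction * (FAN_MAX_DUTY - FAN_MIN_DUTY)


core_temps = [48.0, 55.0, 51.0, 53.0]  # made-up per-core readings
print(f"fan duty: {fan_duty(hottest_sensor(core_temps)):.1f}%")
```

Using the maximum rather than an average means the fan reacts to the hottest spot on the die, which is the point of relaying the highest DTS reading.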

Intel TXT (Intel Trusted Execution Technology)

A security technology to help protect against software-based attacks. It is Intel’s safer computing initiative that defines a set of hardware enhancements that interoperate with an Intel TXT-enabled operating system.

Direct from Wikipedia:

QUOTE

Intel Trusted Execution Technology (Intel TXT) is a hardware extension to some of Intel's microprocessors and respective chipsets, intended to provide users and organizations (governments, enterprises, corporations, universities, etc.) with a higher level of trusting while accessing, modifying or creating sensitive data and code. Intel claims that it will be very useful, especially in the business world, as a way to defend against software-based attacks aimed at stealing sensitive information.

Definitely a must for desktop processors IF it protects your computer from hackers. However, there seems to be a disadvantage to this technology. Thanks to our forumer ktek for this link

C2E and C4

These terms refer to Intel’s processor low-power states (C1, C2, C3 and C4). C0 is the normal operating state of a processor.

In order to conserve energy and reduce thermal load, Intel’s processors include the option of operating in several “states”. During the times when the processor is working hard, the highest available processor clock frequency may be selected to enhance processor throughput. However, when instructions are not being processed, the processor may transfer to one of several available low-power states. In these states, the processor clock frequency may be reduced or completely stopped.

Also important for desktop processors.
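The trade-off behind these states can be sketched as follows: deeper states save more power but take longer to wake from, so the deeper the state, the longer the processor should expect to stay idle before entering it. The selection rule below is similar in spirit to how OS idle governors choose a state, but the state list and all latency/residency numbers are hypothetical, not figures from Intel's datasheets.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CState:
    name: str
    exit_latency_us: float   # time needed to wake back up to C0
    min_residency_us: float  # idle time needed for the state to pay off


# HYPOTHETICAL numbers for illustration only, ordered shallow -> deep.
C_STATES = [
    CState("C1", exit_latency_us=1, min_residency_us=1),
    CState("C2", exit_latency_us=10, min_residency_us=20),
    CState("C3", exit_latency_us=50, min_residency_us=150),
    CState("C4", exit_latency_us=100, min_residency_us=400),
]


def pick_c_state(expected_idle_us: float, max_latency_us: float) -> str:
    """Return the deepest state that fits the expected idle time and latency budget."""
    chosen = "C0"  # stay in the normal operating state if nothing fits
    for state in C_STATES:
        if (state.min_residency_us <= expected_idle_us
                and state.exit_latency_us <= max_latency_us):
            chosen = state.name
    return chosen


print(pick_c_state(expected_idle_us=5, max_latency_us=200))     # short idle
print(pick_c_state(expected_idle_us=1000, max_latency_us=200))  # long idle
```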

4) Other differences - the "prefetchers"

Unconfirmed reports have indicated that there are differences in the prefetchers between the Xeon and desktop CPUs.

Direct quote from this link : http://www.anandtech.com/printarticle.aspx?i=3216

QUOTE

Despite using a Xeon socket, the Core 2 Extreme QX9775 isn't a Xeon. It turns out there are some very subtle differences between Core 2 and Xeon processors, even if they're based on the same core. Intel tunes the prefetchers on Xeon and Core 2 CPUs differently so unlike the Xeon 5365 used in its V8 platform, the QX9775 is identical in every way to desktop Core 2 processors - the only difference being pinout.

Intel wouldn't give us any more information on how the prefetchers are different, but we suspect the algorithms are tuned according to the typical applications Xeons find themselves running vs. where most Core 2s end up.

Another quote from a forumer : http://forum.ncix.com/forums/index.php?mod...ber=5&subpage=2

QUOTE

As Xeons are aimed at servers and workstations, the four prefetchers are tuned for these sorts of applications, rather than standard desktop applications and games

As stated by Anandtech, Intel is not providing more information on the prefetchers and as such, there is no point in speculating further on this matter.

5) CPU binning

"CPU binning" or "speed binning of processors" is basically a process where CPUs are tested and then divided into different bins (i.e. groups) based on their capabilities in terms of speed.

Note: I can't seem to find any good sources on chip binning.

Why is CPU binning important in this guide? Well, it is widely believed that server-class processors go through more "rigorous" testing than desktop processors. It is thought that Xeons, compared to their desktop counterparts:

1) Are higher binned, with lower power requirements (i.e. they run at lower operating voltages)

2) Undergo additional validation testing

Due to the above factors, it is said that CPUs that make the grade for servers/workstations are the best of the breed. This, in turn, translates to a more reliable CPU (they are supposed to run 24/7!) that overclocks better (although not necessarily so).

As an analogy, just imagine that you have 100 runners for the Olympic 100m.

- Imagine that you want to divide them into 10 groups, with group 1 holding the best runners and group 10 the slowest.

- How would you divide them? Naturally, you would categorize them based on their times, e.g. group 1 below 10 seconds, group 2 from 10.0 to 10.5 seconds, group 3 from 10.5 to 11.0 seconds, and so on.

- In the end, you'll have 10 groups to which, for marketing purposes, you give some names:

---Group 1 is QX9650

---Group 2 is QX9450

---Group 3 is X3360

---Group 4 is Q9450

---Group 5 is X3320

---Group 6 is Q9300

---Group 7 is Q8200

---Group 10 is also grouped in special bin - the dust bin!!!!

Now, just substitute bins for groups and you'll get the idea.

As such, the higher binned processors are better as they are capable of higher speeds.
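The runner analogy translates directly into code: test each chip for the highest speed it runs stably, then drop it into the bin whose range that speed falls in. In the sketch below, the bin edges, bin labels and test results are all made up for illustration; Intel does not publish its actual bin boundaries or test procedure.

```python
import bisect

# HYPOTHETICAL bin edges in GHz (lowest speed that qualifies for each bin),
# sorted ascending. Anything below the first edge goes in the dust bin.
BIN_EDGES_GHZ = [2.50, 2.83, 3.00, 3.20]
BIN_NAMES = ["dust bin", "bin D", "bin C", "bin B", "bin A (top bin)"]


def assign_bin(max_stable_ghz: float) -> str:
    """Return the bin for a chip given its highest stable tested speed."""
    # bisect_right counts how many edges the chip meets or beats.
    index = bisect.bisect_right(BIN_EDGES_GHZ, max_stable_ghz)
    return BIN_NAMES[index]


tested_chips = [3.35, 2.90, 2.40, 3.05, 2.83]  # made-up test results
for speed in tested_chips:
    print(f"{speed:.2f} GHz -> {assign_bin(speed)}")
```

Like the runners' times, each chip only needs to be compared against the sorted cut-offs, which is what bisect does.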

How are the bins graded?

Again, there is no good source of information on this.

However, this article on the exciting new "Turbo Mode" feature in Intel's upcoming "Core i7" CPU (which is Nehalem) does give a hint that a "bin" translates to a speed difference of 200-300MHz.

QUOTE

The latest revelation is Turbo Mode. According to IDF stalwart and all round Intel evangelist, Pat Gelsinger, Turbo Mode allows individual cores in its upcoming Core i7 desktop CPU to be entirely switched off.

That in turn allows additional power to be channelled to the remaining cores. The idea is to boost performance in applications that do not make use of all four of Core i7's processor cores.

Intel says that for single threaded applications, the technique allows the speed of a single core to be boosted by two "bins". In layman's terms, a bin translates into around 200-300MHz.

Source : http://www.techradar.com/news/computing/id...e-i7-cpu-455108

Other sources

If you want to know more, the following excerpts explain what chip binning is about.

QUOTE

It’s essentially a practice in which chip manufacturers design a chip to hit a targeted speed grade, say for example 2GHz, but after the chips are manufactured and tested, manufactures find some of the chips perform at the targeted speed grade of 2GHz, some perform at higher than 2GHz, and even more perform at lower speeds than that targeted specification number (some of those lower performing chips may perform at 1.8 GHz, others at 1.5 GHz and some at 1 GHz…and lower).

But instead of throwing out the chips that didn’t hit the targeted performance specification, some semiconductor vendors, especially microprocessor vendors, sell most of them to us, the consumer. They simply put them in bins according to speed grade and price them accordingly. In processors for example, the processors that are the highest speed, essentially overclocked processors, traditionally sell for a premium and go into gaming machines. The ones that hit targeted performance go into high end home computing and business PCs. The ones that didn’t hit their targeted performance go into lower cost PCs and the very very lowest ones get thrown out. Very little is wasted. That’s one of the reasons processor companies do so well, they get to sell most of their inventories. Other types of chips, like ASICs, have to hit performance grades and meet system specifications or customers don’t buy them.

Source from www.edn.com : http://www.edn.com/blog/1480000148/post/1240018324.html

QUOTE

On every lot of wafers produced, regardless of manufacturer, there is a distribution of chips- it may be Gaussian, it may be Poisson, it may be something else- and it is always nearly skewed in some vector or another (fmax at the expense of yield, power at the expense of fmax,...). Since we are discussing fmax, there are typically two options available- produce standard lots, and get low bin splits (small percentages of the lot) in the highest speed bin, but good overall die yield, with lots of lower speed bins. Or... run a skew lot, where top bin split is much higher, but die yield is likely lower, typically due to higher leakage (due to CDs, implants, and a number of other process tricks that yield speed).

Economically speaking, this means that introducing a new top bin would be for one of two reasons:

1. Defensive purposes: competitive environment requires a faster chop to compete.

2. Offensive purposes: competitive environment can be changed by introing a faster part. This could be viewed from the "final nail" viewpoint.

In the case of defensive purposes, there isn't a lot a company can do- if their hand is forced, it is forced. You do what you need to to compete. In the second scenario however, there are additional considerations. If the new top bin is not required to compete, then maximizing profit/revenue behavior should drive the release decision. If by releasing a new top bin, I reduce the amount of $1K parts I can sell, and take my current performance leader and whack the price in half- have I sabotaged myself? If I can get high enough yields of top bin, this is an easy decision- crush the competition. But if I can't, and I can only generate a few %, then why do it?

I believe the latter is the case at Intel today. If Intel HAD to release a higher bin, they would do so. Manufacturing capacity what it is, they can afford to tank yield on some % of skew lots for more higher bin parts. They can even afford to put some "better than stock" cooling on said parts for a few $$/SKU. But the marketplace isn't demanding it, because AMD is currently not executing- and therefore Intel is maximizing profit.

Quoted from Dr. Yield here : http://scientiasblog.blogspot.com/2007/07/...hz-barrier.html
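Dr. Yield's trade-off between a standard lot and a skew lot can be illustrated with a toy model. The sketch below assumes fmax within a lot is normally distributed (he notes the real distribution may not be Gaussian), with the skew lot shifting the mean upwards but losing extra dies to leakage. Every number in it (means, spread, bin edges, the 20% leakage loss) is hypothetical; it only shows the shape of the argument: a bigger top-bin split at the cost of overall yield.

```python
from __future__ import annotations

from statistics import NormalDist

# HYPOTHETICAL lot parameters (GHz), for illustration only.
STANDARD_LOT = NormalDist(mu=3.0, sigma=0.2)
SKEW_LOT = NormalDist(mu=3.2, sigma=0.2)
SKEW_LEAKAGE_LOSS = 0.20  # extra fraction of skew-lot dies rejected for leakage

TOP_BIN_GHZ = 3.4   # dies at or above this go into the top bin
MIN_SELL_GHZ = 2.6  # dies below this are scrapped


def bin_split(lot: NormalDist, extra_loss: float = 0.0) -> dict[str, float]:
    """Return the fraction of all dies in the top bin, lower bins and scrap."""
    good = 1.0 - extra_loss  # dies that survive leakage screening
    top = good * (1.0 - lot.cdf(TOP_BIN_GHZ))
    lower = good * (lot.cdf(TOP_BIN_GHZ) - lot.cdf(MIN_SELL_GHZ))
    return {"top bin": top, "lower bins": lower, "scrap": 1.0 - top - lower}


for name, lot, loss in (("standard", STANDARD_LOT, 0.0),
                        ("skew", SKEW_LOT, SKEW_LEAKAGE_LOSS)):
    split = bin_split(lot, loss)
    print(name, {k: f"{v:.1%}" for k, v in split.items()})
```

With these made-up numbers, the skew lot puts several times more dies in the top bin but scraps far more in total, which is exactly the dilemma he describes.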

6) Issues of compatibility with desktop motherboards

It is important to realize that Xeons are manufactured for servers and workstations. It is said that the microcode of Xeons is very different and, as such, not all motherboards will work correctly with Xeons if their BIOS doesn't recognize the CPU's microcode.

However, most new motherboards, especially the higher-end and better-quality ones, should have a proper BIOS that supports Xeon processors. For older motherboards that support 45nm processors, updating to the latest BIOS (one that supports 45nm processors) should solve the problem.

I, on the other hand, had the unfortunate experience of having a motherboard that was not compatible with my X3320, even with an updated BIOS.
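If you run into this, it helps to confirm what the system actually sees. On Linux, /proc/cpuinfo reports each logical CPU's model name and, on x86 with reasonably recent kernels, the loaded microcode revision. The sketch below parses that text format; the SAMPLE string is made up for illustration (it is not output from my board), and on Windows you would use a tool such as CPU-Z instead.

```python
from __future__ import annotations

from pathlib import Path


def parse_cpuinfo(text: str) -> list[dict[str, str]]:
    """Split /proc/cpuinfo-style text into one dict per logical CPU."""
    cpus: list[dict[str, str]] = []
    current: dict[str, str] = {}
    for line in text.splitlines():
        if not line.strip():
            # A blank line ends the block for one logical CPU.
            if current:
                cpus.append(current)
                current = {}
            continue
        key, _, value = line.partition(":")
        current[key.strip()] = value.strip()
    if current:
        cpus.append(current)
    return cpus


# Made-up sample in the /proc/cpuinfo format, for illustration only.
SAMPLE = """processor\t: 0
model name\t: Intel(R) Xeon(R) CPU X3320 @ 2.50GHz
microcode\t: 0x0

processor\t: 1
model name\t: Intel(R) Xeon(R) CPU X3320 @ 2.50GHz
microcode\t: 0x0
"""

if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    text = path.read_text() if path.exists() else SAMPLE
    for cpu in parse_cpuinfo(text):
        print(cpu.get("processor"), cpu.get("model name"), cpu.get("microcode", "n/a"))
```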

7) Conclusion

I wish there were better sources of information to base this guide on but, alas, there aren't. However, I will draw some conclusions based on the available information. If anybody comes across any good information on this topic, kindly inform me and I will include it here.

So, after presenting all the available information, I guess you want answers to a few questions:

1) Are Xeons EXACTLY the same as their desktop counterparts?

- I'm sure you have seen forums where forumers say something like "THEY ARE EXACTLY THE SAME!", and the tone of their replies suggests that they are 110% sure!!

- Well, as you can see from above, they are NOT exactly the same. Maybe 99% identical?

2) Are there any performance differences for normal users/gamers?

- As far as anybody can tell, they perform the same in games and in any normal everyday applications

- Actually, I can't find any direct comparisons between the Xeons and their desktop counterparts

3) Are the Xeons really good overclockers?

- In general, Xeons should overclock better than desktop CPUs due to their lower voltages and higher binning.

- This is not necessarily so for every individual chip, but we're talking about the overall overclocking capabilities of each class.

- In addition, overclockers would want better reliability, which server processors can provide as they undergo extra testing and are supposed to run 24/7.

- Again, that doesn't mean that desktop processors are less reliable for home users

4) Should I get a Xeon or a proper desktop CPU?

- In my opinion, if you want to overclock, you should get a Xeon rather than its desktop counterpart, PROVIDED that your motherboard can support it.

- You may argue that you can find a good desktop processor with low VID and good overclockability. Well, the same can be said for the Xeon processor right?

So, based on all available information, I would go for a Xeon processor rather than its desktop equivalent, as it has more potential to be a good overclocker, is relatively more reliable and is probably priced about the same.

Of course, there is absolutely nothing wrong in getting a desktop processor!!!

8) Other references

General

1) http://en.wikipedia.org/wiki/Xeon

E3110 review

http://www.circuitremix.com/index.php?q=node/122

X3320 review

http://techreport.com/articles.x/14555

This post has been edited by kmarc: Aug 27 2008, 08:43 PM

Aug 24 2008, 10:02 AM