QUOTE(hellothere131495 @ Jan 28 2025, 02:33 PM)

ah lol. 16GB of VRAM is a kid's toy that can only run 4-bit quantized small models.
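As a rough check on the 16GB claim, weight memory is roughly parameter count × bits per weight / 8. The sketch below is an estimate, not a measurement. It assumes the weights dominate memory use and applies a hypothetical 20% overhead factor for KV cache, activations and runtime buffers. The function name is made up for illustration.

```python
def max_params_billion(vram_gb: float, bits_per_weight: float, overhead: float = 1.2) -> float:
    """Rough upper bound on model size (billions of params) that fits in `vram_gb`.

    Assumes weights dominate memory use. `overhead` is a hypothetical fudge
    factor for KV cache, activations and runtime buffers (1.2 = 20% extra).
    """
    bytes_per_weight = bits_per_weight / 8
    # GB / (bytes per weight) = billions of weights, since both use a 1e9 scale
    return vram_gb / (bytes_per_weight * overhead)


for bits in (16, 8, 4):
    print(f"{bits:>2}-bit: ~{max_params_billion(16, bits):.1f}B params in 16GB")
```

With these assumptions, 16GB tops out at roughly 6.7B parameters at 16-bit, 13.3B at 8-bit and 26.7B at 4-bit. That is why a card this size is limited to small or heavily quantized models.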

o1 is a big model. You probably need around 400GB of VRAM to run it (probably at 4-bit). For the full 32-bit version, idk how much you'd need. Too lazy to calculate.

Full 671B, iinm, needs slightly below 1400GB of memory. That works out to 16-bit weights; full 32-bit would be roughly double, around 2.7TB.
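For anyone too lazy to calculate, the weight memory for a 671B-parameter model at each precision is a one-line formula. The sketch below counts weights only and assumes 1GB = 1e9 bytes. KV cache, activations and framework overhead come on top, which is how a ~336GB 4-bit model ends up needing around 400GB in practice. The function name is hypothetical.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (1e9 bytes); ignores KV cache and overhead."""
    return params_billion * bits_per_weight / 8


for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(671, bits):,.1f} GB")
# 32-bit: 2,684.0 GB
# 16-bit: 1,342.0 GB
#  8-bit: 671.0 GB
#  4-bit: 335.5 GB
```

The 16-bit figure (1342GB) lines up with "slightly below 1400GB", and the 32-bit figure is twice that.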

QUOTE(lawliet88 @ Jan 28 2025, 03:38 PM)

NVLink can. Using NVLink on two RTX 3090s, you get 48GB of shared VRAM. Some guy around half a year ago already built racks with 16 RTX 3090s to get a unified 336GB of VRAM (though 16 × 24GB works out to 384GB, so either the card count or the total is off). But I dunno how he set it up, with what operating system, etc.
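A quick way to sanity-check multi-GPU numbers is to multiply card count by per-card VRAM (24GB on an RTX 3090). The sketch below assumes the model can be split evenly across cards and ignores per-card overhead. In practice inference frameworks usually spread layers across separate devices rather than seeing one truly unified pool, and the RTX 3090's NVLink bridge only links cards in pairs. The helper names are hypothetical.

```python
import math

RTX3090_VRAM_GB = 24  # per-card VRAM of an RTX 3090


def total_vram_gb(num_gpus: int, per_gpu_gb: int = RTX3090_VRAM_GB) -> int:
    """Sum of VRAM across cards (not a single unified pool in practice)."""
    return num_gpus * per_gpu_gb


def gpus_needed(required_gb: float, per_gpu_gb: int = RTX3090_VRAM_GB) -> int:
    """Minimum card count, assuming the model splits evenly across cards."""
    return math.ceil(required_gb / per_gpu_gb)


print(total_vram_gb(2))   # 48
print(total_vram_gb(16))  # 384
print(gpus_needed(336))   # 14
print(gpus_needed(400))   # 17
```

By this arithmetic, 336GB corresponds to 14 cards. A ~400GB 4-bit deployment would need at least 17 cards before accounting for any per-card overhead.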

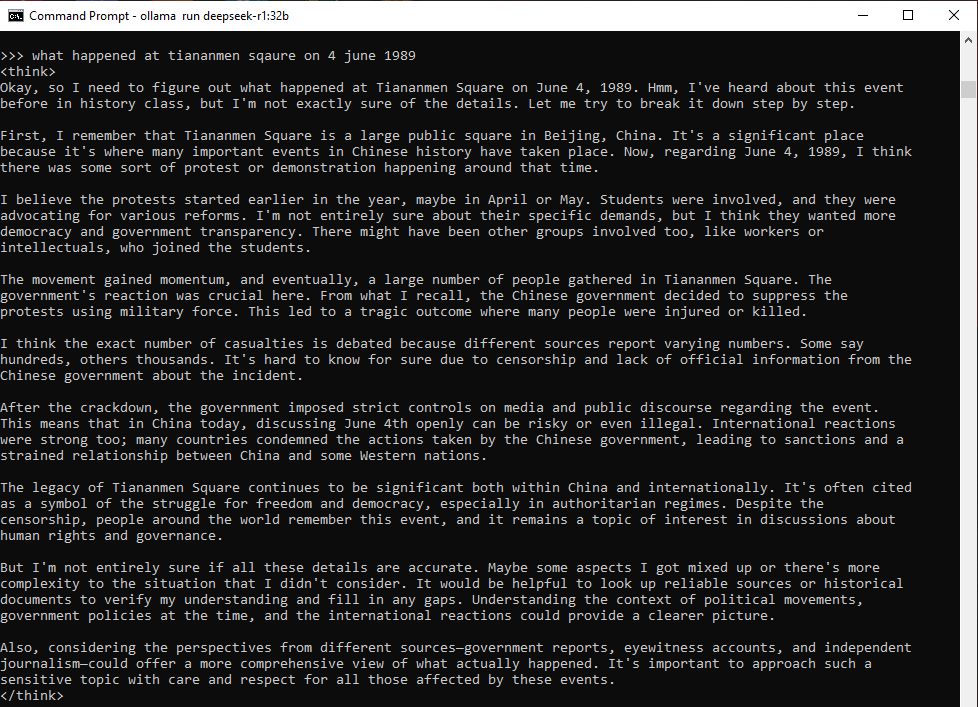

But it can also mean smaller companies can already set up their own servers running DeepSeek R1 locally for their employees, keeping sensitive data in-house.

This post has been edited by terradrive: Jan 28 2025, 05:14 PM

Jan 28 2025, 05:09 PM
