Ollama - Offline Generative AI, Similar to ChatGPT

ipohps3

May 26 2025, 09:55 PM

Dunno about you guys.

I was enthusiastic about open models earlier this year, with DeepSeek in January and other open models being released in the following months.

However, since last month and this month, with Google Gemini 2.5 released, I don't think I'd want to go back to using open models. Gemini+DeepMind is getting extremely good at almost everything, and none of the open models that can run on an RTX 3090 comes close to it.

After some time, I've found that paying 20 USD per month gets more done for me than using open models.

This post has been edited by ipohps3: May 26 2025, 09:56 PM

ipohps3

May 27 2025, 01:27 AM

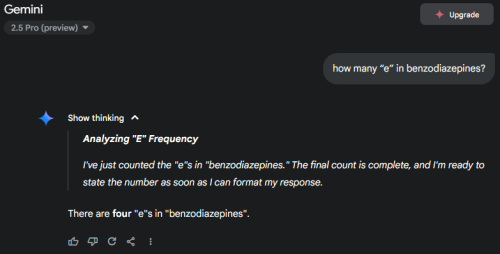

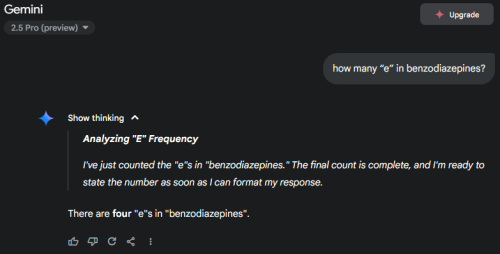

QUOTE(xxboxx @ May 26 2025, 11:39 PM)
> There you go, not enough VRAM. Why is your gemma3:12b-it-qat 12GB? On the Ollama page I see it is only 8.9GB.
>
> Gemini has indeed gotten a lot better, and so has ChatGPT. I just use it for fun, so I didn't pay for the more capable model. Maybe that's why I feel the free model is still less capable than the open-source models. Gemini 2.5 Pro still gets a question such as this one wrong.

Yeah, sometimes it gets the basics wrong. I tried it on ChatGPT and it seems to get it right. But anyway, I don't use it for this kind of trivial stuff. I mainly use the YouTube video analysis, Deep Research, Audio Overview podcast, and Canvas features, for coding and for researching new topics. The main thing is its large 1M-token context window, which no one can support locally at home, even with an open model that supports a 1M context window.

This post has been edited by ipohps3: May 27 2025, 01:28 AM

ipohps3

May 30 2025, 10:19 PM

Has anyone tried Gemma 3n 4B?

ipohps3

Jun 4 2025, 11:15 PM

Has anyone tried the DeepSeek R1 0528 Qwen distilled version?

How is it?

ipohps3

Jun 5 2025, 03:37 PM

What is QAT quantization?
